# Generating the data

```python
import torch
from d2l import torch as d2l

class SyntheticRegressionData(d2l.DataModule):  #@save
    """Synthetic data for linear regression."""
    def __init__(self, w, b, noise=0.01, num_train=1000, num_val=1000,
                 batch_size=32):
        super().__init__()
        self.save_hyperparameters()
        n = num_train + num_val
        # Features drawn from a standard normal distribution
        self.X = torch.randn(n, len(w))
        # Gaussian noise with standard deviation `noise`
        noise = torch.randn(n, 1) * noise
        self.y = torch.matmul(self.X, w.reshape((-1, 1))) + b + noise
```
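The class only generates the `n = num_train + num_val` rows; how they are split is left to the rest of the `d2l` module. A minimal sketch of such a split in plain PyTorch, assuming the first `num_train` rows serve as training data and the remaining rows as validation data (the helper name `make_split` is hypothetical):

```python
import torch

def make_split(w, b, noise=0.01, num_train=1000, num_val=1000):
    """Hypothetical helper: generate data like SyntheticRegressionData, then split it."""
    n = num_train + num_val
    X = torch.randn(n, len(w))
    y = torch.matmul(X, w.reshape((-1, 1))) + b + torch.randn(n, 1) * noise
    # Assumption: first num_train rows for training, the rest for validation
    train = (X[:num_train], y[:num_train])
    val = (X[num_train:], y[num_train:])
    return train, val

torch.manual_seed(0)  # arbitrary seed for reproducibility
(train_X, train_y), (val_X, val_y) = make_split(torch.tensor([2, -3.4]), 4.2)
print(train_X.shape, train_y.shape, val_X.shape, val_y.shape)
```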

Alternatively, the data can be generated with a plain function instead of a class.

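We generate 1000 examples with two features each, using the true parameters $\mathbf{w} = [2, -3.4]^\top$ and $b = 4.2$:

$$\mathbf{y}= \mathbf{X} \mathbf{w} + b + \boldsymbol{\epsilon},$$

where each entry of the noise $\boldsymbol{\epsilon}$ is drawn from a normal distribution with mean 0 and standard deviation 0.01.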
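To make the formula concrete, take two made-up rows of $\mathbf{X}$ (illustrative values only) and drop the noise. The first row $[1, 2]$ gives $1 \cdot 2 + 2 \cdot (-3.4) + 4.2 = -0.6$ and the second row $[0, 1]$ gives $0 \cdot 2 + 1 \cdot (-3.4) + 4.2 = 0.8$. The same computation in PyTorch, where the scalar `b` is broadcast to every row:

```python
import torch

w_example = torch.tensor([2.0, -3.4])
b_example = 4.2
X_example = torch.tensor([[1.0, 2.0],
                          [0.0, 1.0]])  # two illustrative examples, two features each
# Noise-free part Xw + b; b is added to every row by broadcasting
y_clean = torch.matmul(X_example, w_example) + b_example
print(y_clean)  # close to [-0.6, 0.8]
```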

```python
import torch

def synthetic_data(w, b, num_examples):  #@save
    """Generate y = Xw + b + noise."""
    X = torch.normal(0, 1, (num_examples, len(w)))
    y = torch.matmul(X, w) + b
    y += torch.normal(0, 0.01, y.shape)
    return X, y.reshape((-1, 1))

# Pick the ground-truth parameters (arbitrary values)
true_w = torch.tensor([2, -3.4])
true_b = 4.2
features, labels = synthetic_data(true_w, true_b, 1000)
```
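A quick sanity check on the generated data, as a sketch: subtracting the noise-free part $\mathbf{X}\mathbf{w} + b$ from the labels should leave only the noise, so the residual's standard deviation should come out close to 0.01. The block repeats `synthetic_data` so it runs on its own; the seed and the names `X_check`, `y_check` are arbitrary choices for this illustration.

```python
import torch

def synthetic_data(w, b, num_examples):
    """Generate y = Xw + b + noise (same recipe as above)."""
    X = torch.normal(0, 1, (num_examples, len(w)))
    y = torch.matmul(X, w) + b
    y += torch.normal(0, 0.01, y.shape)
    return X, y.reshape((-1, 1))

torch.manual_seed(0)  # arbitrary seed for reproducibility
true_w = torch.tensor([2, -3.4])
true_b = 4.2
X_check, y_check = synthetic_data(true_w, true_b, 1000)

# Remove the noise-free part; what is left should be the Gaussian noise
residual = y_check - (torch.matmul(X_check, true_w.reshape((-1, 1))) + true_b)
print(X_check.shape, y_check.shape)
print(float(residual.std()))  # should be close to 0.01
```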

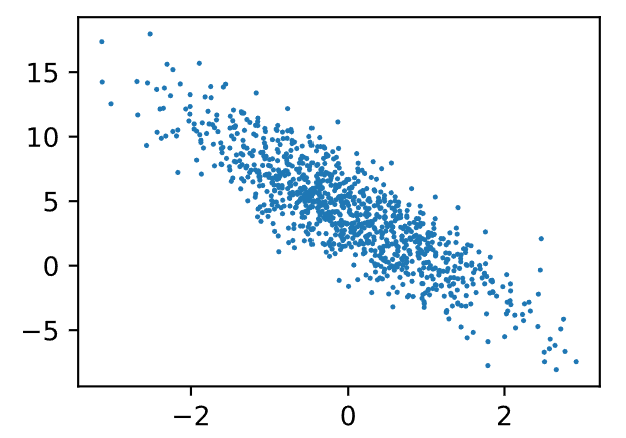

# 看下图像(分布)

d2l.set_figsize()

d2l.plt.scatter(features[:, 1].detach().numpy(), labels.detach().numpy(), 1);

```python
import random
import torch

def data_iter(batch_size, features, labels):
    num_examples = len(features)
    indices = list(range(num_examples))
    # The examples are read in random order, with no particular sequence
    random.shuffle(indices)
    for i in range(0, num_examples, batch_size):
        batch_indices = torch.tensor(
            indices[i: min(i + batch_size, num_examples)])
        yield features[batch_indices], labels[batch_indices]
```

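## `yield` in `data_iter`

In Python, `yield` turns `data_iter` into a generator that returns one minibatch of features and labels at a time. Each iteration step produces the next batch until all examples have been processed; calling `data_iter` again creates a new generator with a freshly shuffled order.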
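To see the batching behaviour without tensors, here is a minimal sketch that uses plain Python lists in place of `features` and `labels` (the function name `list_batches` and the toy data are made up for illustration). With 23 examples and a batch size of 10, the last batch holds only 3 examples, which is exactly what `min(i + batch_size, num_examples)` allows for:

```python
import random

def list_batches(batch_size, features, labels):
    """Same logic as data_iter, but on plain Python lists."""
    num_examples = len(features)
    indices = list(range(num_examples))
    random.shuffle(indices)  # a new random order for every new generator
    for i in range(0, num_examples, batch_size):
        batch_indices = indices[i: min(i + batch_size, num_examples)]
        yield ([features[j] for j in batch_indices],
               [labels[j] for j in batch_indices])

toy_features = list(range(23))
toy_labels = [2 * x for x in toy_features]
sizes = [len(X) for X, _ in list_batches(10, toy_features, toy_labels)]
print(sizes)  # [10, 10, 3]
```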

```python
batch_size = 10

# Peek at the first minibatch
for X, y in data_iter(batch_size, features, labels):
    print(X, '\n', y)
    break
```

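```python
# Initialize the parameters: w from a normal distribution (mean 0, std 0.01), b as zero
w = torch.normal(0, 0.01, size=(2, 1), requires_grad=True)
b = torch.zeros(1, requires_grad=True)
```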

```python
def linreg(X, w, b):  #@save
    """Linear regression model."""
    return torch.matmul(X, w) + b
```

```python
def squared_loss(y_hat, y):  #@save
    """Squared loss."""
    return (y_hat - y.reshape(y_hat.shape)) ** 2 / 2
```
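A small worked example with made-up numbers: for predictions $\hat{y} = (2.5, 0)$ and labels $y = (3, -1)$, the per-example losses are $(2.5 - 3)^2/2 = 0.125$ and $(0 - (-1))^2/2 = 0.5$. The `reshape` matters here: the labels arrive as a flat vector of shape `(2,)` while the predictions have shape `(2, 1)`, and without it `y_hat - y` would broadcast to shape `(2, 2)`. The `_demo` names are only for this illustration.

```python
import torch

def squared_loss(y_hat, y):
    """Squared loss (same definition as above)."""
    return (y_hat - y.reshape(y_hat.shape)) ** 2 / 2

y_hat_demo = torch.tensor([[2.5], [0.0]])  # predictions, shape (2, 1)
y_demo = torch.tensor([3.0, -1.0])         # labels, shape (2,)
l_demo = squared_loss(y_hat_demo, y_demo)
print(l_demo)  # per-example losses of shape (2, 1): 0.125 and 0.5
```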

```python
# The SGD optimization algorithm
def sgd(params, lr, batch_size):  #@save
    """Minibatch stochastic gradient descent."""
    with torch.no_grad():
        for param in params:
            param -= lr * param.grad / batch_size
            param.grad.zero_()
```
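One step of `sgd` worked out by hand, on a made-up minibatch of two examples with features $x = 1, 2$ and labels $0$, starting from $w = 1$, $b = 0$. The summed loss is $\sum_i (x_i w + b - y_i)^2/2 = (1 + 4)/2 = 2.5$, with gradients $\sum_i x_i (x_i w + b - y_i) = 1 + 4 = 5$ for $w$ and $\sum_i (x_i w + b - y_i) = 1 + 2 = 3$ for $b$. With a learning rate of 0.1 and a batch size of 2, the update gives $w = 1 - 0.1 \cdot 5/2 = 0.75$ and $b = 0 - 0.1 \cdot 3/2 = -0.15$. A sketch that checks this (the `_demo` names are illustrative):

```python
import torch

def sgd(params, lr, batch_size):
    """Minibatch stochastic gradient descent (same as above)."""
    with torch.no_grad():
        for param in params:
            param -= lr * param.grad / batch_size
            param.grad.zero_()

X_demo = torch.tensor([[1.0], [2.0]])  # two examples, one feature each
y_demo = torch.zeros(2, 1)
w_demo = torch.ones(1, 1, requires_grad=True)
b_demo = torch.zeros(1, requires_grad=True)

l_demo = ((torch.matmul(X_demo, w_demo) + b_demo - y_demo) ** 2 / 2).sum()
l_demo.backward()  # w_demo.grad is 5, b_demo.grad is 3
sgd([w_demo, b_demo], lr=0.1, batch_size=2)
print(w_demo.item(), b_demo.item())  # approximately 0.75 and -0.15
```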

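## `torch.no_grad()`: the parameter update is excluded from gradient computation

Inside this context, no gradients are computed or stored for any tensor operation. Without it, PyTorch would try to track the parameter update in the autograd graph; since each `param` is a leaf tensor with `requires_grad=True`, the in-place `param -= ...` actually raises a `RuntimeError` instead of merely wasting computation.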
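A minimal sketch of the difference, using a made-up leaf tensor `w_demo` with a hand-set gradient in place of a real backward pass: the in-place update is rejected outside `torch.no_grad()` and succeeds inside it.

```python
import torch

w_demo = torch.ones(2, requires_grad=True)  # leaf tensor, like the model parameters
w_demo.grad = torch.full((2,), 0.5)         # pretend a backward pass produced this gradient

try:
    w_demo -= 0.1 * w_demo.grad             # autograd rejects in-place ops on such leaves
    failed = False
except RuntimeError:
    failed = True

with torch.no_grad():
    w_demo -= 0.1 * w_demo.grad             # not recorded by autograd, so allowed

print(failed, w_demo)  # True, and every entry of w_demo is now 0.95
```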

```python
lr = 0.03
num_epochs = 3
net = linreg
loss = squared_loss

for epoch in range(num_epochs):
    for X, y in data_iter(batch_size, features, labels):
        l = loss(net(X, w, b), y)  # minibatch loss on X and y
        # l has shape (batch_size, 1) rather than being a scalar, so its
        # elements are summed and the gradient w.r.t. [w, b] is computed from that sum
        l.sum().backward()
        sgd([w, b], lr, batch_size)  # update the parameters using their gradients
    with torch.no_grad():
        train_l = loss(net(features, w, b), labels)
        print(f'epoch {epoch + 1}, loss {float(train_l.mean()):f}')

# epoch 1, loss 0.045634
# epoch 2, loss 0.000185
# epoch 3, loss 0.000052
```

```python
print(f'error in estimating w: {true_w - w.reshape(true_w.shape)}')
print(f'error in estimating b: {true_b - b}')
```

```
error in estimating w: tensor([-0.0002, -0.0004], grad_fn=<SubBackward0>)
error in estimating b: tensor([0.0012], grad_fn=<RsubBackward1>)
```

# 2. Concise implementation with PyTorch

```python
import torch
from torch.utils import data

# Use the built-in data API in torch.utils.data (TensorDataset, DataLoader)
def load_array(data_arrays, batch_size, is_train=True):  #@save
    """Construct a PyTorch data iterator."""
    dataset = data.TensorDataset(*data_arrays)
    return data.DataLoader(dataset, batch_size, shuffle=is_train)

batch_size = 10
data_iter = load_array((features, labels), batch_size)
next(iter(data_iter))
```
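A small check of how `DataLoader` handles a dataset whose size is not a multiple of the batch size (the toy tensors are made up): with 23 examples and `batch_size=10` there are 3 batches, the last one holding 3 examples. `shuffle=False` is used here only so the output is deterministic.

```python
import torch
from torch.utils import data

X_toy = torch.arange(23, dtype=torch.float32).reshape(23, 1)  # 23 toy examples, 1 feature
y_toy = 2 * X_toy
loader = data.DataLoader(data.TensorDataset(X_toy, y_toy), batch_size=10, shuffle=False)

sizes = [len(xb) for xb, yb in loader]
print(len(loader), sizes)  # 3 [10, 10, 3]
```

Passing `drop_last=True` to `DataLoader` would discard the final incomplete batch instead.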

```python
# nn is short for "neural networks"
from torch import nn

net = nn.Sequential(nn.Linear(2, 1))

# Initialize: weights from a normal distribution (mean 0, std 0.01), bias to 0
net[0].weight.data.normal_(0, 0.01)
net[0].bias.data.fill_(0)

loss = nn.MSELoss()
trainer = torch.optim.SGD(net.parameters(), lr=0.03)
```
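Note that `nn.MSELoss()` (with its default `reduction='mean'`) averages $(\hat{y} - y)^2$ without the factor $1/2$ used in `squared_loss`, so for the same predictions its value is twice the mean of `squared_loss`. A check on made-up numbers:

```python
import torch
from torch import nn

def squared_loss(y_hat, y):
    """Squared loss from the from-scratch version."""
    return (y_hat - y.reshape(y_hat.shape)) ** 2 / 2

y_hat_demo = torch.tensor([[2.5], [0.0]])
y_demo = torch.tensor([[3.0], [-1.0]])
mse = nn.MSELoss()(y_hat_demo, y_demo)          # mean of (0.25, 1.0) = 0.625
half = squared_loss(y_hat_demo, y_demo).mean()  # mean of (0.125, 0.5) = 0.3125
print(mse.item(), half.item())  # 0.625 0.3125
```

This is one reason the loss values printed by the two implementations are not directly comparable.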

```python
num_epochs = 3
for epoch in range(num_epochs):
    for X, y in data_iter:
        l = loss(net(X), y)
        trainer.zero_grad()
        l.backward()
        trainer.step()
    l = loss(net(features), labels)
    print(f'epoch {epoch + 1}, loss {l:f}')

# epoch 1, loss 0.000103
# epoch 2, loss 0.000104
# epoch 3, loss 0.000103
```

```python
w = net[0].weight.data
print('error in estimating w:', true_w - w.reshape(true_w.shape))
b = net[0].bias.data
print('error in estimating b:', true_b - b)
```

```
error in estimating w: tensor([1.6212e-05, 3.0875e-04])
error in estimating b: tensor([-0.0002])
```

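`with` is Python's context-manager statement. Its syntax is:

```python
with expression as variable:
    # code block
    ...
```

For example, opening a file (the file is closed automatically when the block exits):

```python
with open('example.txt', 'r') as file:
    contents = file.read()
    print(contents)
```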
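To show what `with` does under the hood, here is a minimal context manager written as a class (the name `Tracker` is made up): `__enter__` runs when the block starts, and `__exit__` runs when it ends, even if an exception is raised inside the block. `torch.no_grad()` in `sgd` is used the same way.

```python
class Tracker:
    """Toy context manager that records when it is entered and exited."""

    def __init__(self, log):
        self.log = log

    def __enter__(self):
        self.log.append("enter")
        return self  # this is what gets bound to the name after `as`

    def __exit__(self, exc_type, exc_value, traceback):
        self.log.append("exit")
        return False  # do not suppress exceptions

log = []
with Tracker(log):
    log.append("body")
print(log)  # ['enter', 'body', 'exit']

log2 = []
try:
    with Tracker(log2):
        raise ValueError("boom")
except ValueError:
    pass
print(log2)  # ['enter', 'exit']
```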

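`next(iterator[, default])` returns the next item from an iterator. When the iterator is exhausted, it returns `default` if one is given and otherwise raises `StopIteration`.

```python
# Create a simple iterator
numbers = iter([1, 2, 3, 4, 5])
print(next(numbers))  # prints 1
print(next(numbers))  # prints 2
print(next(numbers))  # prints 3
print(next(numbers))  # prints 4
print(next(numbers))  # prints 5
# Calling next(numbers) again would raise a StopIteration exception
```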
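A short sketch of the optional `default` argument, which avoids the exception once the iterator runs out:

```python
numbers = iter([1, 2])
first = next(numbers)             # 1
second = next(numbers)            # 2
fallback = next(numbers, 'done')  # iterator exhausted, so the default 'done' is returned

try:
    next(numbers)                 # no default: raises StopIteration
    raised = False
except StopIteration:
    raised = True
print(first, second, fallback, raised)  # 1 2 done True
```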

An iterator is an object that implements the iterator protocol; specifically, it implements the `__iter__()` and `__next__()` methods.
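A minimal hand-written iterator (the class name `Countdown` is made up for illustration): `__iter__()` returns the object itself, and `__next__()` returns the next value or raises `StopIteration` when nothing is left. A `for` loop or `list()` relies on exactly these two methods.

```python
class Countdown:
    """Iterator that counts down from start to 1."""

    def __init__(self, start):
        self.current = start

    def __iter__(self):
        return self

    def __next__(self):
        if self.current <= 0:
            raise StopIteration  # signals that the iterator is exhausted
        value = self.current
        self.current -= 1
        return value

print(list(Countdown(3)))  # [3, 2, 1]
it = Countdown(2)
print(next(it), next(it), next(it, None))  # 2 1 None
```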

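A generator function (a function that contains `yield`) returns such an iterator:

```python
def simple_generator():
    yield 1
    yield 2
    yield 3

gen = simple_generator()
print(next(gen))  # prints 1
print(next(gen))  # prints 2
print(next(gen))  # prints 3
```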
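Generators run lazily: the function body executes only up to the next `yield` each time a value is requested. This is why the `data_iter` function defined earlier shuffles once per new generator and then hands out batches on demand. A small sketch with a made-up `traced_generator` that logs its progress:

```python
def traced_generator(log):
    log.append('start')
    yield 1
    log.append('after 1')
    yield 2
    log.append('end')

log = []
gen = traced_generator(log)
print(log)        # [] : creating the generator runs none of its body
print(next(gen))  # 1
print(log)        # ['start']
rest = list(gen)  # runs the remaining body to completion
print(rest, log)  # [2] ['start', 'after 1', 'end']
```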