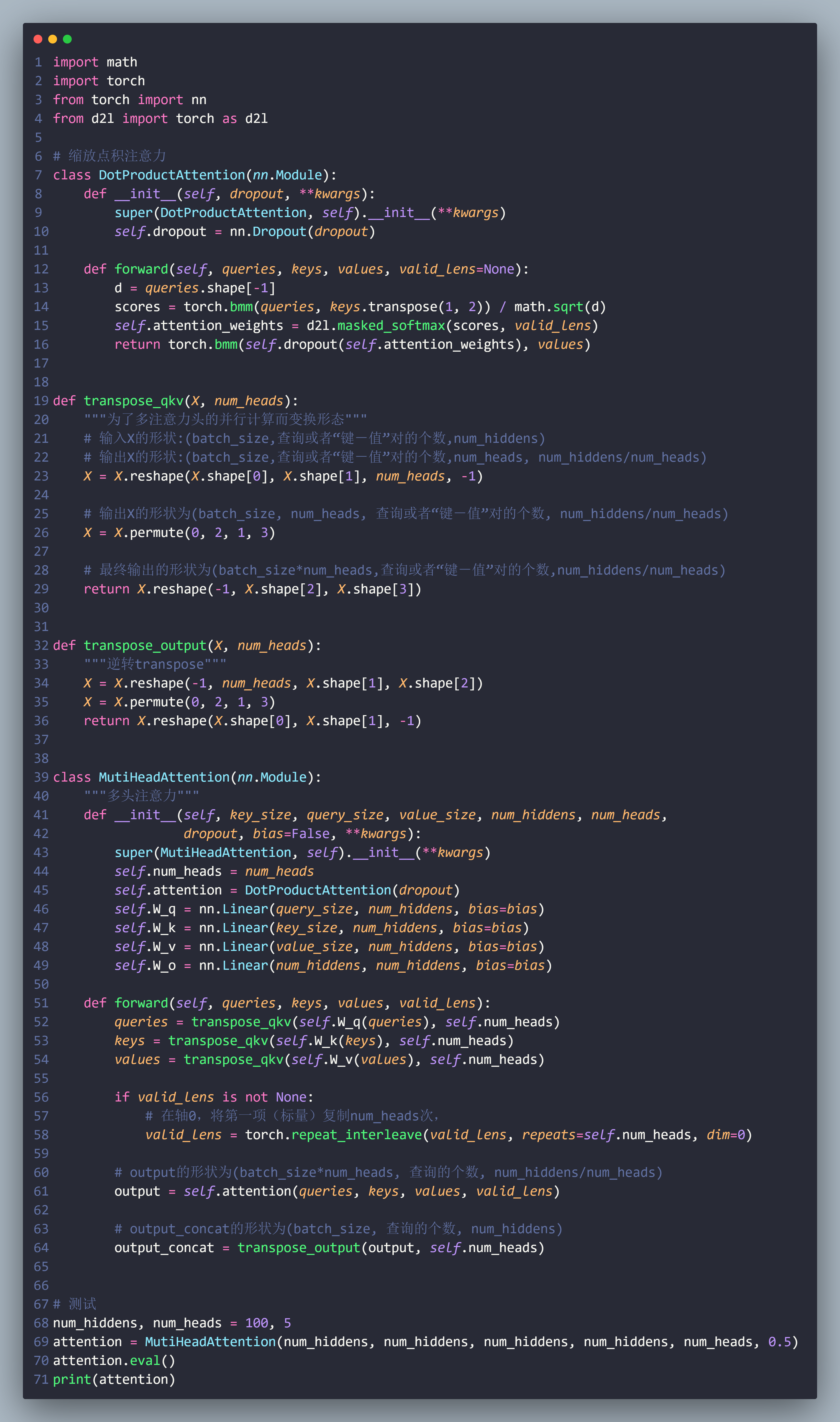

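import math

import torch
from torch import nn
from d2l import torch as d2l


# Scaled dot-product attention
class DotProductAttention(nn.Module):
    def __init__(self, dropout, **kwargs):
        super(DotProductAttention, self).__init__(**kwargs)
        self.dropout = nn.Dropout(dropout)

    def forward(self, queries, keys, values, valid_lens=None):
        # queries: (batch_size, number of queries, d)
        # keys: (batch_size, number of key-value pairs, d)
        # values: (batch_size, number of key-value pairs, value dimension)
        d = queries.shape[-1]
        # Divide by sqrt(d) so the scale of the scores does not grow with d
        scores = torch.bmm(queries, keys.transpose(1, 2)) / math.sqrt(d)
        # Keys beyond valid_lens receive (almost) zero attention weight
        self.attention_weights = d2l.masked_softmax(scores, valid_lens)
        return torch.bmm(self.dropout(self.attention_weights), values)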
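The class above delegates masking to `d2l.masked_softmax`, which is not shown in this post. As a rough illustration of what such a helper does, here is a minimal sketch that assumes `valid_lens` is either `None` or a 1D tensor with one length per sample. The name `masked_softmax_sketch` is hypothetical, and this is not the d2l implementation itself.

```python
import torch
from torch import nn


def masked_softmax_sketch(scores, valid_lens):
    """Softmax over the last axis, ignoring key positions past each valid length.

    scores: (batch_size, number of queries, number of keys)
    valid_lens: None, or a 1D tensor of shape (batch_size,)
    """
    if valid_lens is None:
        return nn.functional.softmax(scores, dim=-1)
    num_keys = scores.shape[-1]
    positions = torch.arange(num_keys, device=scores.device)
    # mask[b, 0, k] is True where key position k lies within sample b's valid length
    mask = positions[None, None, :] < valid_lens[:, None, None]
    # Masked positions get a very large negative score, so their weight becomes ~0
    masked_scores = scores.masked_fill(~mask, -1e6)
    return nn.functional.softmax(masked_scores, dim=-1)


# Uniform scores make the effect of the mask easy to see
scores = torch.zeros(2, 1, 4)
weights = masked_softmax_sketch(scores, torch.tensor([2, 3]))
print(weights)
```

With uniform scores, the first sample spreads its weight over its 2 valid keys and the second over its 3 valid keys, while the remaining positions get no weight.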

def transpose_qkv(X, num_heads):
    """Reshape X so that all attention heads can be computed in parallel."""
    # Input X: (batch_size, number of queries or key-value pairs, num_hiddens)
    # After reshape: (batch_size, number of queries or key-value pairs, num_heads, num_hiddens/num_heads)
    X = X.reshape(X.shape[0], X.shape[1], num_heads, -1)
    # After permute: (batch_size, num_heads, number of queries or key-value pairs, num_hiddens/num_heads)
    X = X.permute(0, 2, 1, 3)
    # Final output: (batch_size*num_heads, number of queries or key-value pairs, num_hiddens/num_heads)
    return X.reshape(-1, X.shape[2], X.shape[3])


def transpose_output(X, num_heads):
    """Reverse the operation of transpose_qkv."""
    X = X.reshape(-1, num_heads, X.shape[1], X.shape[2])
    X = X.permute(0, 2, 1, 3)
    return X.reshape(X.shape[0], X.shape[1], -1)
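To make the reshaping in these two helpers concrete, the sketch below restates the same reshape/permute sequence under the hypothetical names `split_heads` and `merge_heads` (so that it runs on its own) and checks two things on a small tensor: which slice of the hidden dimension ends up in which row, and that merging undoes splitting. The sizes are arbitrary illustration values.

```python
import torch


def split_heads(X, num_heads):
    # Same reshape -> permute -> reshape sequence as transpose_qkv
    batch_size, seq_len, _ = X.shape
    X = X.reshape(batch_size, seq_len, num_heads, -1).permute(0, 2, 1, 3)
    return X.reshape(-1, X.shape[2], X.shape[3])


def merge_heads(X, num_heads):
    # Same reshape -> permute -> reshape sequence as transpose_output
    X = X.reshape(-1, num_heads, X.shape[1], X.shape[2]).permute(0, 2, 1, 3)
    return X.reshape(X.shape[0], X.shape[1], -1)


batch_size, seq_len, num_hiddens, num_heads = 2, 3, 8, 4
head_dim = num_hiddens // num_heads
X = torch.arange(batch_size * seq_len * num_hiddens, dtype=torch.float32)
X = X.reshape(batch_size, seq_len, num_hiddens)

heads = split_heads(X, num_heads)
print(heads.shape)  # torch.Size([8, 3, 2])

# Row b * num_heads + h holds the h-th slice of the hidden dimension of sample b
b, h = 1, 2
same_slice = torch.equal(heads[b * num_heads + h],
                         X[b, :, h * head_dim:(h + 1) * head_dim])
# Merging the heads again recovers the original tensor
round_trip = torch.equal(merge_heads(heads, num_heads), X)
print(same_slice, round_trip)
```

Because the heads of one sample sit in consecutive rows, the per-sample `valid_lens` have to be repeated `num_heads` times in the same order before they are passed to the attention layer.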

class MutiHeadAttention(nn.Module):
    """Multi-head attention."""

    def __init__(self, key_size, query_size, value_size, num_hiddens, num_heads,
                 dropout, bias=False, **kwargs):
        super(MutiHeadAttention, self).__init__(**kwargs)
        self.num_heads = num_heads
        self.attention = DotProductAttention(dropout)
        self.W_q = nn.Linear(query_size, num_hiddens, bias=bias)
        self.W_k = nn.Linear(key_size, num_hiddens, bias=bias)
        self.W_v = nn.Linear(value_size, num_hiddens, bias=bias)
        self.W_o = nn.Linear(num_hiddens, num_hiddens, bias=bias)

    def forward(self, queries, keys, values, valid_lens):
        queries = transpose_qkv(self.W_q(queries), self.num_heads)
        keys = transpose_qkv(self.W_k(keys), self.num_heads)
        values = transpose_qkv(self.W_v(values), self.num_heads)

        if valid_lens is not None:
            # Along axis 0, repeat each item of valid_lens num_heads times,
            # so that every head of a sample uses that sample's valid length
            valid_lens = torch.repeat_interleave(valid_lens, repeats=self.num_heads, dim=0)

        # output: (batch_size*num_heads, number of queries, num_hiddens/num_heads)
        output = self.attention(queries, keys, values, valid_lens)
        # output_concat: (batch_size, number of queries, num_hiddens)
        output_concat = transpose_output(output, self.num_heads)
        # Apply the output projection W_o and return the result
        return self.W_o(output_concat)

# Test
num_hiddens, num_heads = 100, 5
attention = MutiHeadAttention(num_hiddens, num_hiddens, num_hiddens, num_hiddens, num_heads, 0.5)
attention.eval()
print(attention)
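Printing the module only lists its layers. A forward pass on dummy tensors is a more telling check of the shapes. To keep the snippet runnable without d2l, the sketch below swaps in PyTorch's built-in `nn.MultiheadAttention` rather than the class from this post, and expresses the valid lengths as a `key_padding_mask`. The batch size, sequence lengths, and valid lengths are made-up illustration values.

```python
import torch
from torch import nn

num_hiddens, num_heads = 100, 5
batch_size, num_queries, num_kvpairs = 2, 4, 6
valid_lens = torch.tensor([3, 2])

# Built-in layer used as a stand-in; batch_first=True gives (batch, seq, feature) tensors
builtin_attention = nn.MultiheadAttention(num_hiddens, num_heads, dropout=0.5,
                                          bias=False, batch_first=True)
builtin_attention.eval()  # disables dropout

X = torch.ones(batch_size, num_queries, num_hiddens)  # queries
Y = torch.ones(batch_size, num_kvpairs, num_hiddens)  # keys and values

# True marks key positions to ignore, i.e. positions at or beyond the valid length
key_padding_mask = torch.arange(num_kvpairs)[None, :] >= valid_lens[:, None]

with torch.no_grad():
    out, attn_weights = builtin_attention(X, Y, Y, key_padding_mask=key_padding_mask)

print(out.shape)           # (batch_size, num_queries, num_hiddens)
print(attn_weights.shape)  # (batch_size, num_queries, num_kvpairs), averaged over heads
```

The same kind of check applies to the class in this post: with inputs of these shapes and `valid_lens` passed directly, its output should also have shape `(batch_size, num_queries, num_hiddens)`.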

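A better-looking version

Where is this being displayed? The syntax highlighting looks pretty nice. I'd like to set it up too.

It's CodeSnap, a screenshot extension for VS Code.

The better-looking one is really slick. Upvoted.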