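Two important functions

dir(): open the box and look inside

help(): read the instruction manual

Toolbox analogy:

dir(pytorch) output: 1, 2, 3, 4

dir(pytorch.3) output: a, b, c

help(pytorch.3.a) output: put this wrench in a specific place, then turn it

Summary:

dir() tells us what is inside a toolbox and inside each of its compartments.

help() tells us how each tool is used.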
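This minimal sketch tries both functions on something that runs without installing PyTorch. It uses the standard-library `os.path` module as the "toolbox". With PyTorch installed, `dir(torch)` and `help(torch.cuda.is_available)` work the same way.

```python
import os

# dir(): list what is inside the "toolbox" (here the os.path module)
names = dir(os.path)
print("join" in names)  # True

# help(): print the "manual" for one specific tool
help(os.path.join)
```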

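Comparing PyCharm and Jupyter

Treating a "block" as the unit of code that runs as a whole:

1. Python file: the block is all the lines of the file

Pros: universal, easy to share, suited to large projects

Cons: has to be rerun from the beginning

2. Python console

Pros: shows the value and attributes of every variable

Cons: makes code hard to read and modify

3. Jupyter

Pros: makes code easy to read and modify

Cons: the environment has to be configured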

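A first look at loading data in PyTorch

Dataset: provides a way to get each data sample and its label

Dataloader: packages the data in the form the network that follows needs (for example, batches in shuffled order)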

Dataset in practice

```python
import os

from PIL import Image
from torch.utils.data import Dataset


class MyData(Dataset):
    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir    # e.g. 'dataset/train'
        self.label_dir = label_dir  # e.g. 'ants_image'; the folder name doubles as the label
        self.path = os.path.join(self.root_dir, self.label_dir)
        self.img_path = os.listdir(self.path)  # file names of all images in the folder

    def __getitem__(self, idx):
        img_name = self.img_path[idx]
        img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)
        img = Image.open(img_item_path)
        label = self.label_dir
        return img, label

    def __len__(self):
        return len(self.img_path)


if __name__ == '__main__':
    root_dir = 'dataset/train'
    ants_label_dir = 'ants_image'
    bees_label_dir = 'bees_image'
    ants_dataset = MyData(root_dir, ants_label_dir)
    bees_dataset = MyData(root_dir, bees_label_dir)
    # Adding two Datasets gives a ConcatDataset: the ants samples followed by the bees samples
    train_dataset = ants_dataset + bees_dataset
```

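Steps:

1. Get the root path of the dataset, then the sub-path, and finally join the two paths.

2. os.path.join(): joins the two paths.

3. os.listdir(): returns the list of file names in the directory.

4. The __getitem__ method is typically used in a dataset class to fetch a single sample by index. During training, dataset[i] returns the i-th sample of the dataset.

5. The custom __getitem__ method fetches a single sample from the dataset. It first uses the input index idx to look up the image file name img_name in self.img_path. It then builds the full image path img_item_path from that name and opens the file with PIL's Image.open(), assigning the image object to img. Finally, it returns the image object img and the label (the folder name label_dir) as a tuple.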
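This is a dependency-free sketch of the `__getitem__` / `__len__` protocol described in the steps above. It assumes a hypothetical class `FolderDataset` that mirrors `MyData`. It returns the file path instead of a PIL image so that it runs without Pillow or PyTorch. It also adds `sorted()`, which `MyData` does not use, to make the sample order reproducible.

```python
import os
import tempfile


class FolderDataset:
    """Plain-Python stand-in for MyData: one sample per file, label = folder name."""

    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir
        self.label_dir = label_dir
        # sorted() makes the order reproducible; os.listdir() alone returns an arbitrary order
        self.img_names = sorted(os.listdir(os.path.join(root_dir, label_dir)))

    def __getitem__(self, idx):
        img_item_path = os.path.join(self.root_dir, self.label_dir, self.img_names[idx])
        # MyData returns Image.open(img_item_path); the path keeps this sketch dependency-free
        return img_item_path, self.label_dir

    def __len__(self):
        return len(self.img_names)


# Throwaway layout: <root>/ants_image/{a,b}.jpg and <root>/bees_image/c.jpg (empty placeholder files)
root = tempfile.mkdtemp()
for folder, files in {"ants_image": ["a.jpg", "b.jpg"], "bees_image": ["c.jpg"]}.items():
    os.makedirs(os.path.join(root, folder))
    for name in files:
        open(os.path.join(root, folder, name), "w").close()

ants = FolderDataset(root, "ants_image")
bees = FolderDataset(root, "bees_image")
print(len(ants), len(bees))  # 2 1
print(ants[1][1])            # ants_image
```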

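When the labels are too long to encode in a folder name, we create a text file holding the label for each image instead.

Code:

```python
import os

root_dir = 'dataset/train'
target_dir = 'ants_image'
img_path = os.listdir(os.path.join(root_dir, target_dir))
label = target_dir.split('_')[0]  # 'ants_image' -> 'ants'
out_dir = 'ants_label'
os.makedirs(os.path.join(root_dir, out_dir), exist_ok=True)  # open() fails if the folder does not exist
for i in img_path:
    file_name = i.split('.jpg')[0]  # strip the extension: '0013035.jpg' -> '0013035'
    with open(os.path.join(root_dir, out_dir, "{}.txt".format(file_name)), 'w') as f:
        f.write(label)
```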

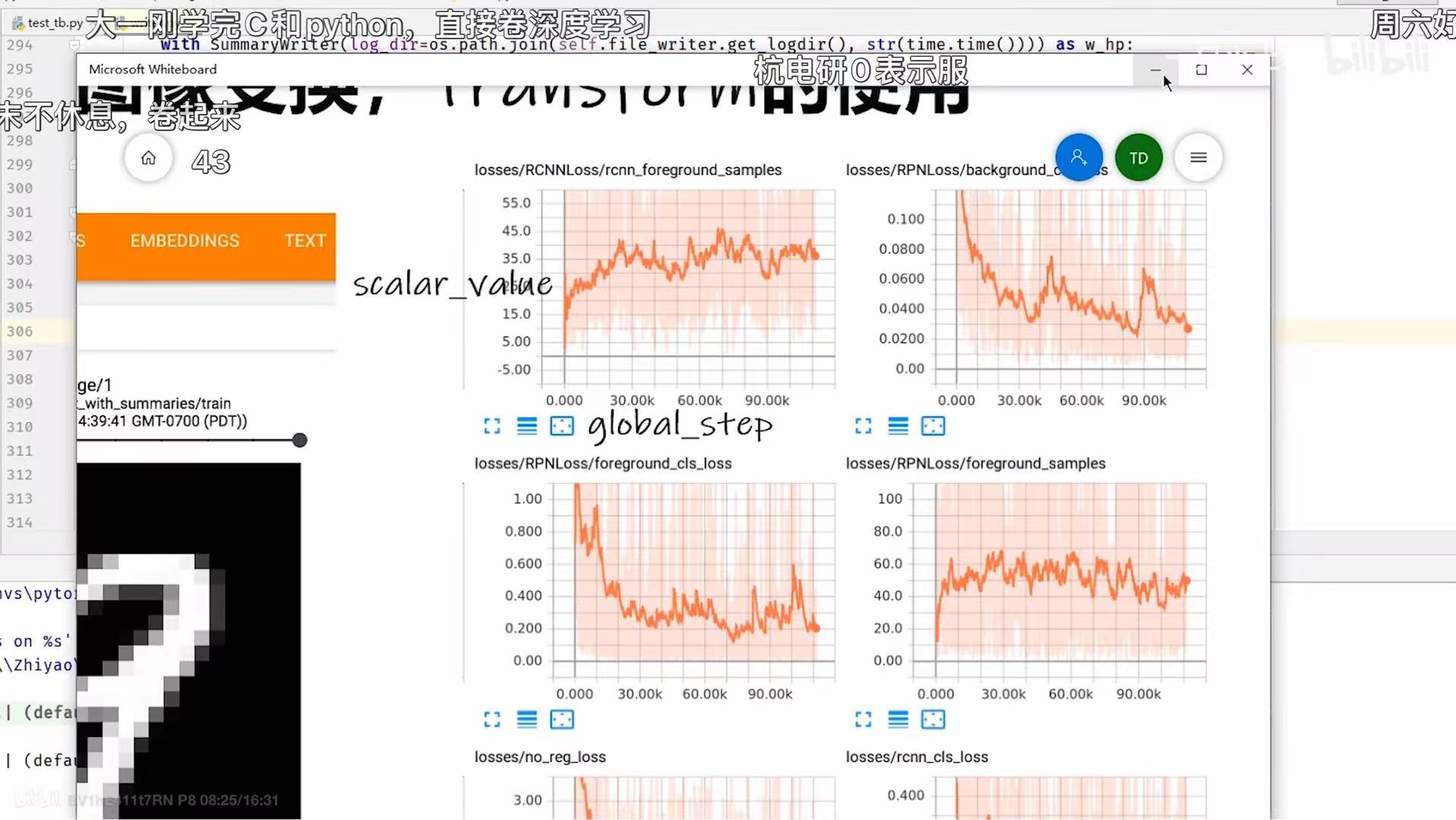
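Once the label files exist, a Dataset's `__getitem__` can read them back. This sketch runs the same write logic on a temporary folder and reads one label back. It uses `os.path.splitext` instead of `split('.jpg')`. The function names are hypothetical helpers, not part of any library.

```python
import os
import tempfile


def write_label_files(root_dir, target_dir, out_dir):
    """Same idea as the script above: one <image-name>.txt per image, containing the label."""
    label = target_dir.split('_')[0]
    os.makedirs(os.path.join(root_dir, out_dir), exist_ok=True)
    for name in os.listdir(os.path.join(root_dir, target_dir)):
        stem = os.path.splitext(name)[0]
        with open(os.path.join(root_dir, out_dir, stem + ".txt"), "w") as f:
            f.write(label)


def read_label(root_dir, out_dir, img_name):
    """Hypothetical helper a Dataset's __getitem__ could call to look up an image's label."""
    stem = os.path.splitext(img_name)[0]
    with open(os.path.join(root_dir, out_dir, stem + ".txt")) as f:
        return f.read().strip()


root = tempfile.mkdtemp()
os.makedirs(os.path.join(root, "ants_image"))
open(os.path.join(root, "ants_image", "0013035.jpg"), "w").close()
write_label_files(root, "ants_image", "ants_label")
print(read_label(root, "ants_label", "0013035.jpg"))  # ants
```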

Using TensorBoard

--logdir = the name of the folder that holds the event files; --port selects the port (the default is 6006)

tensorboard --logdir=logs --port=6007

from torch.utils.tensorboard import SummaryWriter

import numpy as np

from PIL import Image

writer = SummaryWriter('logs')

image_path = "data/train/bees_image/16838648_415acd9e3f.jpg"

img_PIL = Image.open(image_path)

img_array = np.array(img_PIL)

print(type(img_array))

print(img_array.shape)

writer.add_image("train", img_array, 2, dataformats='HWC')

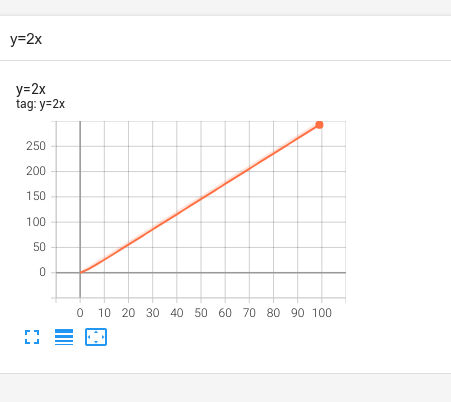

for i in range(100):

writer.add_scalar("y=2x", 3*i, i)

writer.close()

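Note:

add_image accepts the following types for its image argument:

img_tensor (torch.Tensor, numpy.array, or string/blobname): Image data

By default add_image expects the (C, H, W) layout. A NumPy array converted from a PIL image is (H, W, C), which is why the code above passes dataformats='HWC'.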

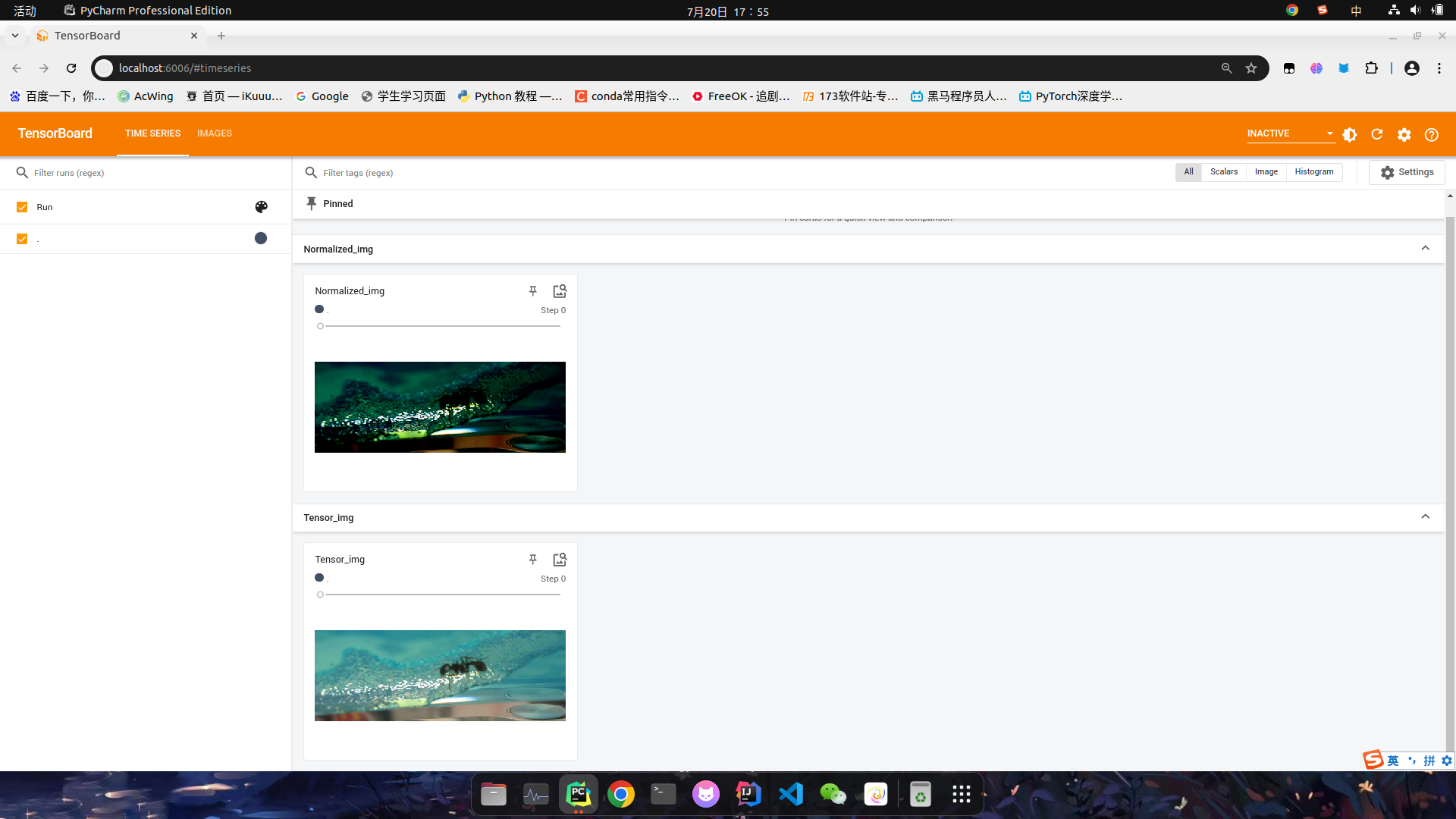

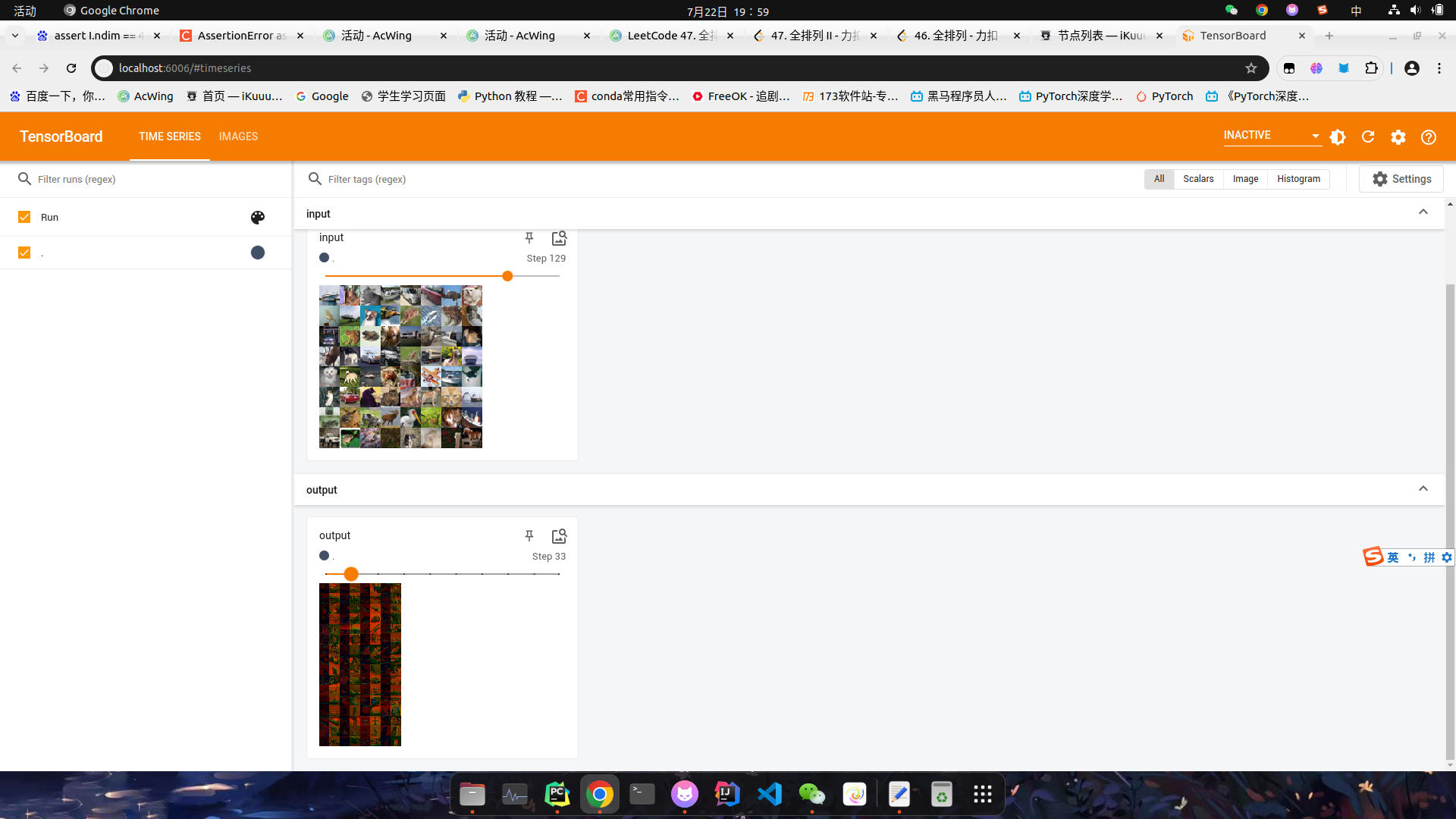
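The difference between the two layouts is only the order of the indices. This minimal pure-Python sketch uses a hypothetical `hwc_to_chw` helper on nested lists. It shows the rearrangement that `dataformats='HWC'` tells `add_image` to undo.

```python
def hwc_to_chw(img):
    """img[h][w][c] -> out[c][h][w]: the same pixels, channel index moved to the front."""
    height, width, channels = len(img), len(img[0]), len(img[0][0])
    return [[[img[h][w][c] for w in range(width)] for h in range(height)]
            for c in range(channels)]


# a 2x2 RGB image: each pixel is [R, G, B]
img = [[[255, 0, 0], [0, 255, 0]],
       [[0, 0, 255], [255, 255, 255]]]
chw = hwc_to_chw(img)
print(chw[0])  # red channel: [[255, 0], [0, 255]]
```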

Results:

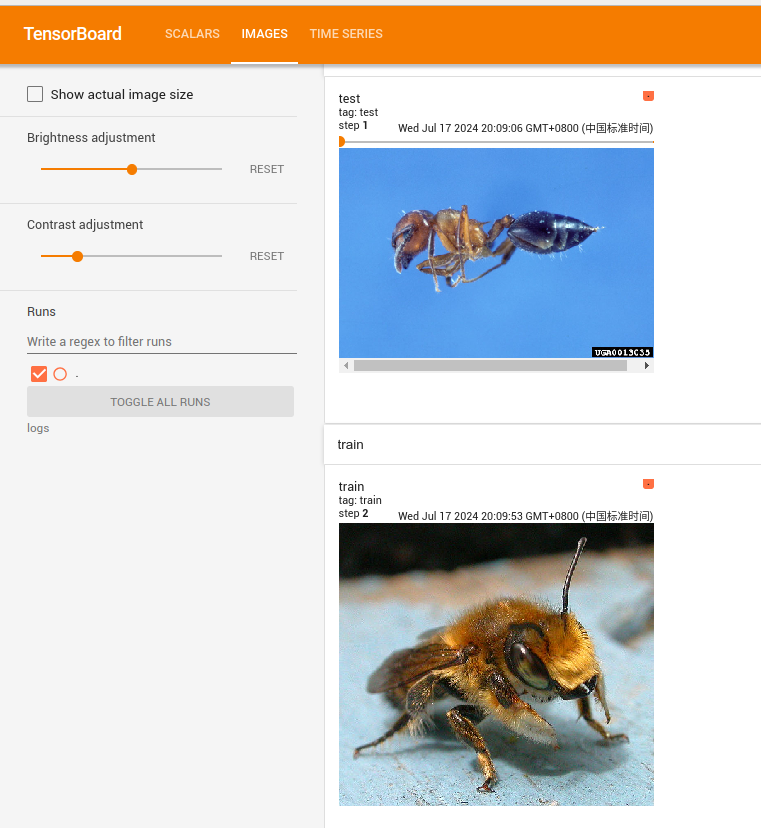

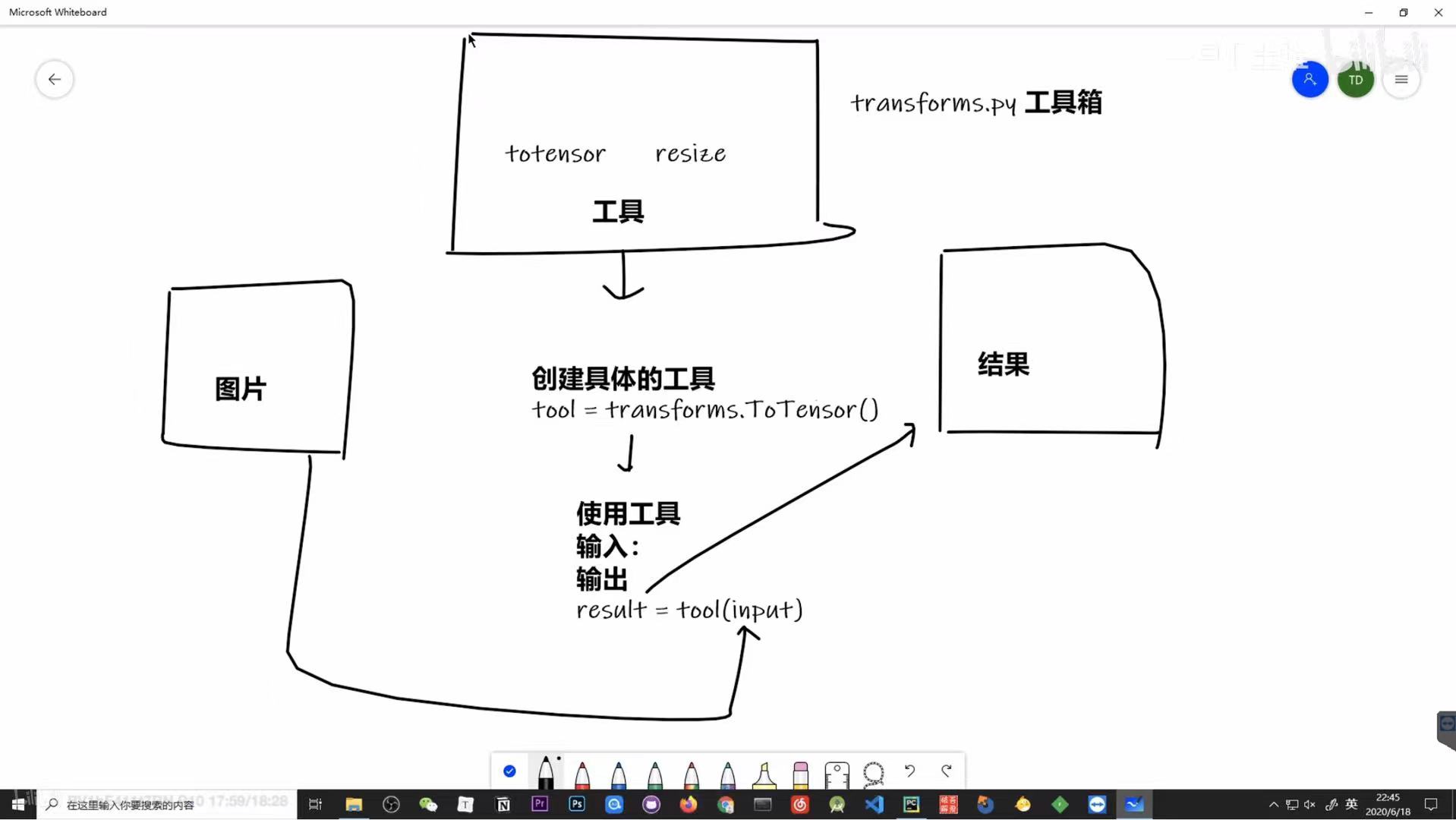

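Using Transforms

Code:

```python
from PIL import Image
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

# Python usage -> tensor data type
# Use transforms.ToTensor to look at two questions:
# 1. How are transforms used (in Python)?
# 2. Why do we need the Tensor data type?

img_path = "data/train/ants_image/0013035.jpg"
img = Image.open(img_path)
writer = SummaryWriter("logs")

# 1. How to use a transform: create the tool, then call it on the input
tensor_trans = transforms.ToTensor()
tensor_img = tensor_trans(img)  # PIL image -> float tensor of shape (C, H, W)
print(tensor_img)
writer.add_image("Tensor_img", tensor_img)  # already (C, H, W), so no dataformats argument
writer.close()
```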
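Besides moving the channel dimension first, ToTensor also rescales the pixel values of 8-bit images. Those integers in 0..255 become floats in 0.0..1.0. This sketch shows only that value change, under that 8-bit assumption. `scale_uint8` is a hypothetical name.

```python
def scale_uint8(values):
    """ToTensor's value change for 8-bit images: integers 0..255 become floats 0.0..1.0."""
    return [v / 255 for v in values]


print(scale_uint8([0, 51, 255]))  # [0.0, 0.2, 1.0]
```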

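Normalize (normalization)

```python
# Continues the ToTensor script above (run it before writer.close())
# Normalize: output[channel] = (input[channel] - mean[channel]) / std[channel]
print(tensor_img[0][0][0])  # first pixel of channel 0 before normalization
trans_norm = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])  # mean and std of the 3 channels
img_norm = trans_norm(tensor_img)
print(img_norm[0][0][0])
writer.add_image("Normalized_img", img_norm)
```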

Result:
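This one-channel sketch shows the arithmetic behind `Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])` in plain Python. With mean and std both 0.5, values that ToTensor put in [0, 1] end up in [-1, 1].

```python
def normalize(channel_values, mean, std):
    """(x - mean) / std for every value of one channel, as Normalize does per channel."""
    return [(x - mean) / std for x in channel_values]


print(normalize([0.0, 0.5, 1.0], 0.5, 0.5))  # [-1.0, 0.0, 1.0]
```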

Resize and Compose

```python
# Resize
print(img.size)  # PIL gives (width, height)
trans_resize = transforms.Resize((512, 512))  # (height, width)
# img PIL -> resize -> img_resize PIL
img_resize = trans_resize(img)
# img_resize PIL -> ToTensor -> img_resize tensor
img_resize = tensor_trans(img_resize)
writer.add_image("Resized", img_resize, 0)
print(img_resize)

# Compose - resize - 2
trans_resize_2 = transforms.Resize(512)  # a single int: the smaller edge becomes 512, aspect ratio kept
# PIL -> PIL -> tensor: the output of each transform is the input of the next one
trans_compose = transforms.Compose([trans_resize_2, tensor_trans])
img_resize_2 = trans_compose(img)
writer.add_image("Resized", img_resize_2, 1)
```
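`Resize((512, 512))` and `Resize(512)` produce different shapes. This sketch computes the output size for both forms. The torchvision docs give the rule: for a single int, the smaller edge is matched to it and the aspect ratio is kept. The `int()` truncation is my reading of torchvision's implementation and may differ by a pixel between versions. `resized_size` is a hypothetical helper.

```python
def resized_size(width, height, size):
    """Output (height, width) of transforms.Resize(size) for a PIL image of size (width, height)."""
    if isinstance(size, (tuple, list)):
        return tuple(size)  # explicit (h, w): the aspect ratio is not preserved
    short, long = (width, height) if width <= height else (height, width)
    new_short, new_long = size, int(size * long / short)
    # the smaller edge becomes `size`; the other edge is scaled to keep the aspect ratio
    return (new_long, new_short) if width <= height else (new_short, new_long)


print(resized_size(500, 375, (512, 512)))  # (512, 512)
print(resized_size(500, 375, 512))         # (512, 682): landscape, height is the short edge
print(resized_size(375, 500, 512))         # (682, 512): portrait, width is the short edge
```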

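RandomCrop (random cropping)

```python
# RandomCrop
# The second positional argument of RandomCrop is padding, so this call means
# size=500 (a 500x500 crop) with 181 pixels of padding on every side.
# To crop a 500x181 (height x width) region instead, pass a tuple: transforms.RandomCrop((500, 181))
trans_random = transforms.RandomCrop(500, 181)
trans_compose_2 = transforms.Compose([trans_random, tensor_trans])
for i in range(10):
    img_crop = trans_compose_2(img)  # a different random crop on every call
    writer.add_image("RandomCrop", img_crop, i)
```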
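A random crop picks a fresh top-left corner on every call, which is why the ten images above differ. This sketch shows that choice for a crop without padding. It picks the corner uniformly at random so that the window stays inside the image. The 512x768 image size and the helper name `random_crop_box` are assumptions for illustration.

```python
import random


def random_crop_box(img_h, img_w, crop_h, crop_w, rng=random):
    """Pick a crop window uniformly at random: returns (top, left, height, width)."""
    if crop_h > img_h or crop_w > img_w:
        raise ValueError("crop is larger than the image")
    top = rng.randint(0, img_h - crop_h)   # randint includes both end points
    left = rng.randint(0, img_w - crop_w)
    return top, left, crop_h, crop_w


rng = random.Random(0)  # seeded so the boxes are reproducible
boxes = [random_crop_box(512, 768, 500, 181, rng) for _ in range(10)]
print(boxes[0])
```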

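Points to note

1. Pay attention to the input and output data types.

2. Read the official documentation often.

3. Pay attention to the parameters a method requires.

4. When you don't know what a function returns, use print(x), print(type(x)), or the debugger.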

Using the datasets in torchvision

```python
import torchvision
from torch.utils.tensorboard import SummaryWriter

dataset_transform = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor(),
])

train_set = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=dataset_transform, download=True)
test_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=dataset_transform, download=True)

# print(train_set[0])
# print(test_set.classes)
#
# img, target = train_set[0]
# print(img)
# print(target)
# print(test_set.classes[target])
# img.show()  # works only without the ToTensor transform, when img is a PIL image

print(test_set[0])  # (image tensor, class index)

writer = SummaryWriter("p10")
for i in range(10):
    img, target = test_set[i]
    writer.add_image("test_set", img, i)
writer.close()
```

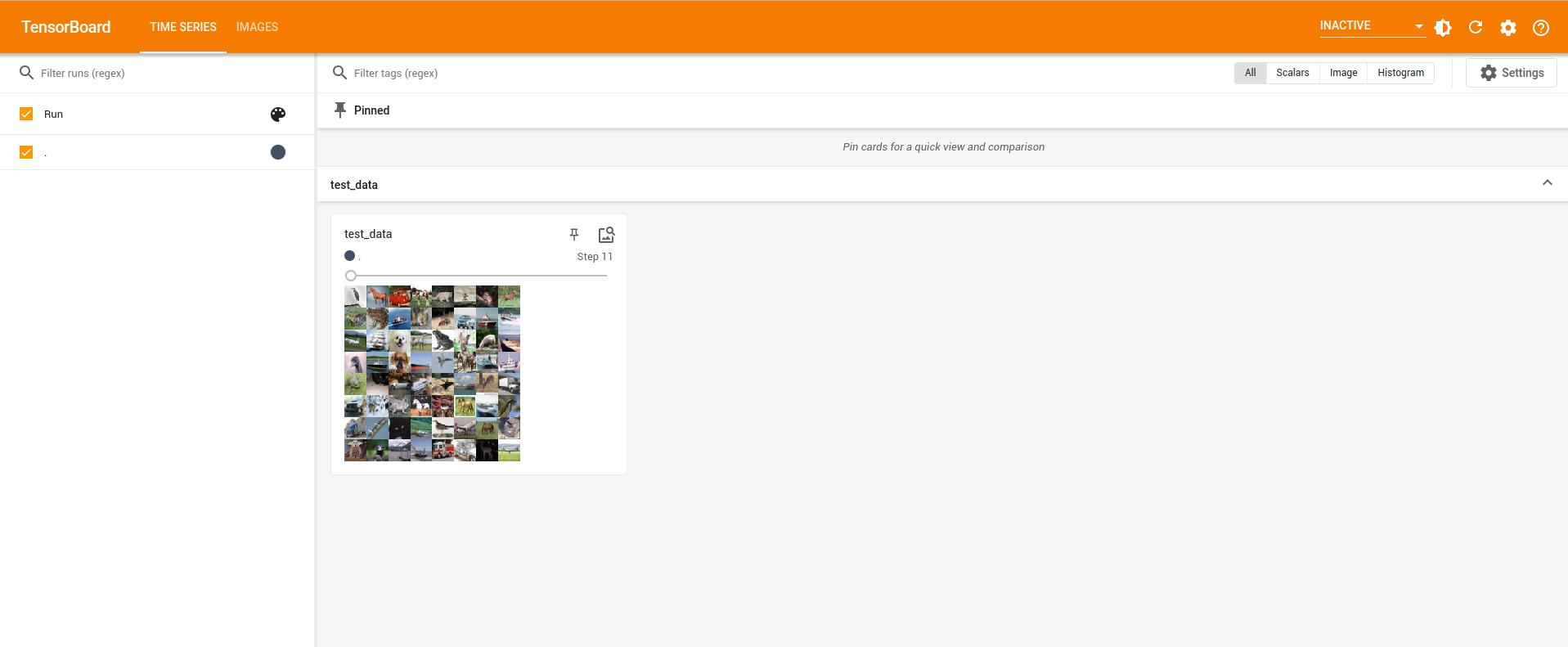

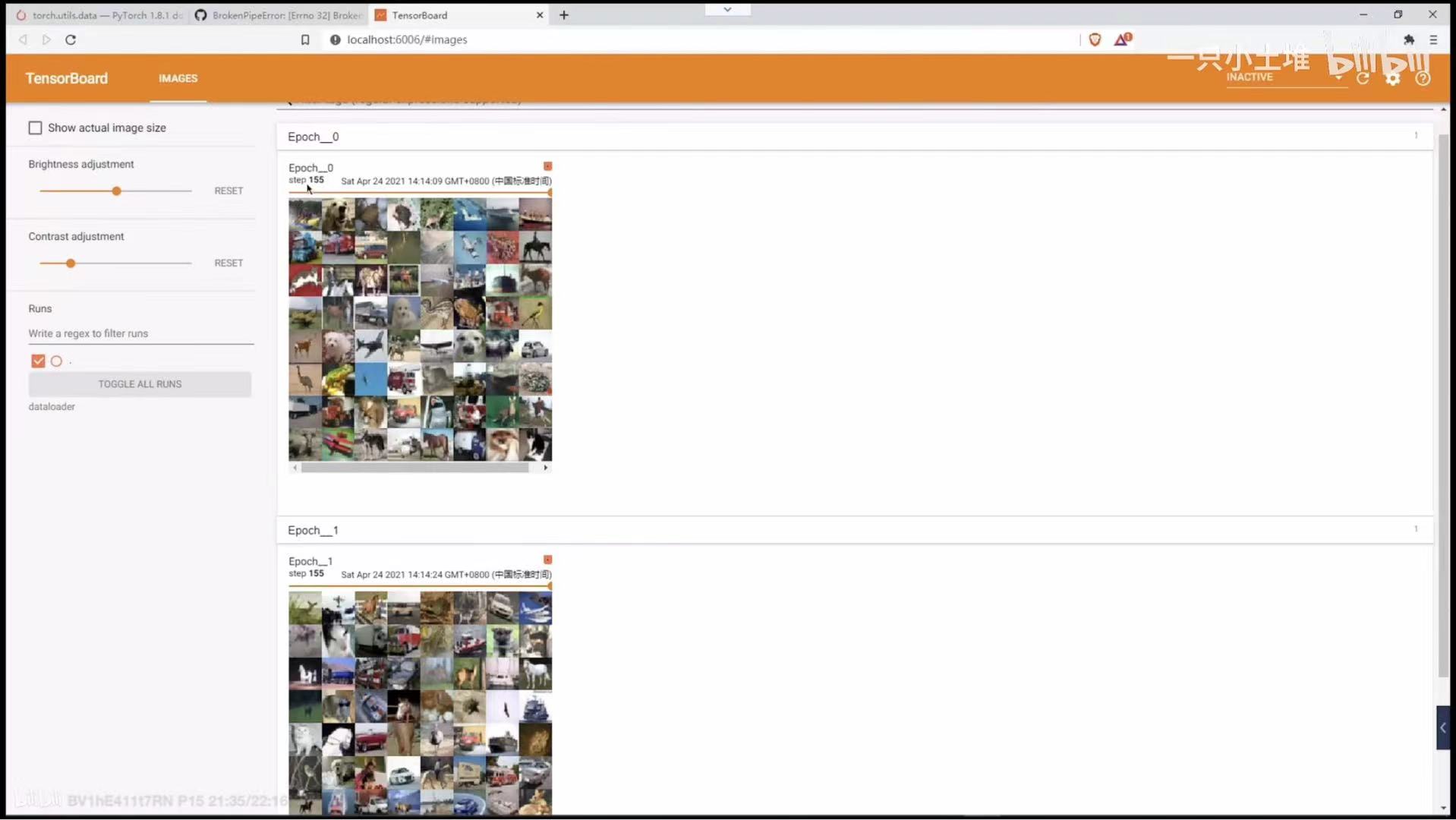

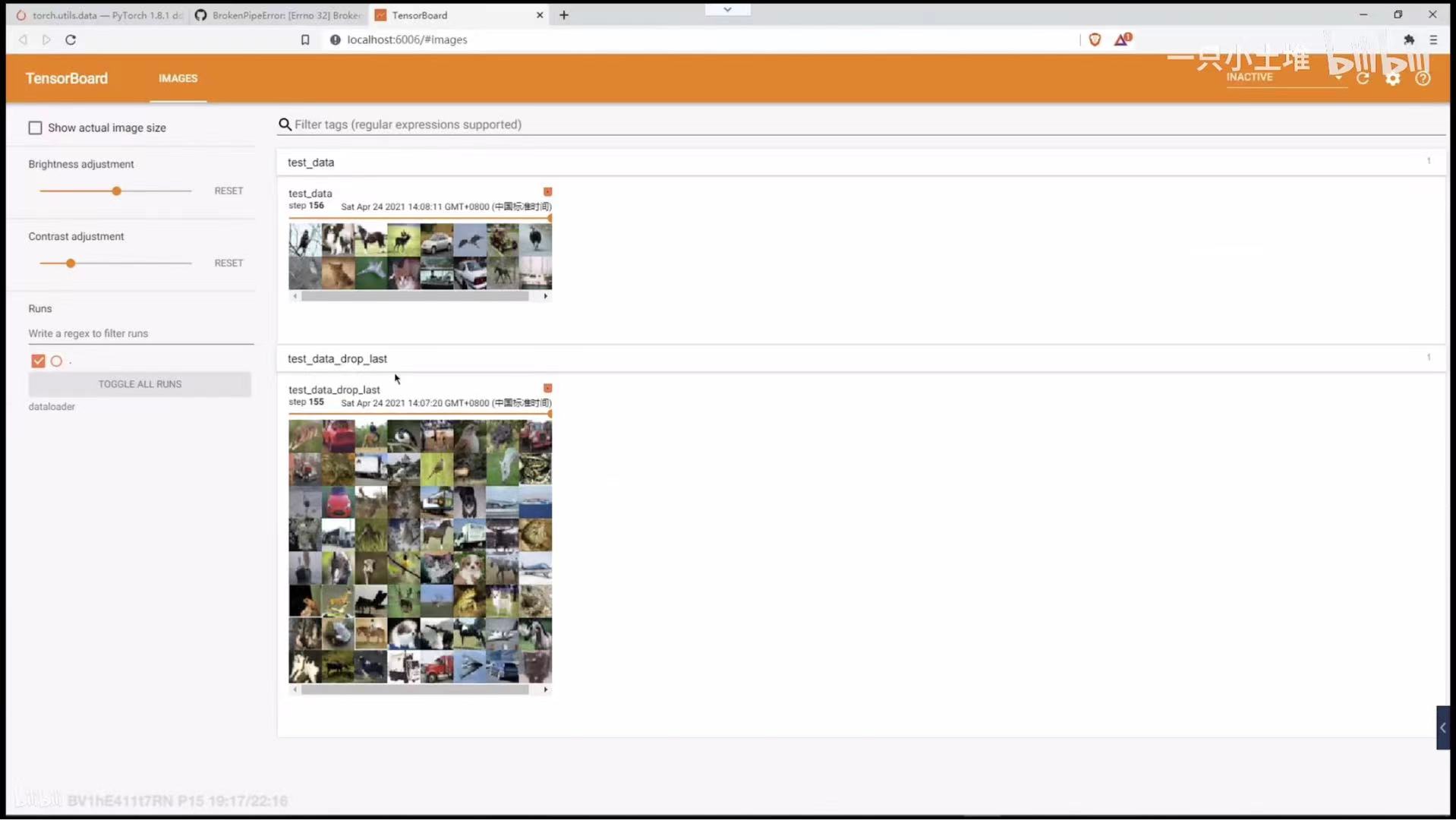

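Using DataLoader

If the dataset is a deck of cards, the DataLoader draws a few cards from the deck at a time so that we can work with them.

DataLoader has many parameters that control how the cards are drawn:

batch_size=64: how many samples are drawn per batch (for example, how many images are shown per step in TensorBoard).

shuffle=True: reshuffle the data at the start of every epoch, so each pass sees the samples in a different order.

drop_last=False: whether to drop the last batch when it has fewer than batch_size samples (False keeps it).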
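These three parameters can be sketched in plain Python as a way of grouping sample indices. This is an approximation. The real DataLoader works through samplers and also collates each batch into tensors, which is not shown. `make_batches` is a hypothetical name. The numbers match the CIFAR-10 test set used below: 10000 images with batch_size=64.

```python
import random


def make_batches(num_samples, batch_size, shuffle=False, drop_last=False, seed=None):
    """Group sample indices into batches, roughly what DataLoader's sampler does (no collation)."""
    indices = list(range(num_samples))
    if shuffle:
        random.Random(seed).shuffle(indices)  # DataLoader reshuffles at the start of every epoch
    batches = [indices[i:i + batch_size] for i in range(0, num_samples, batch_size)]
    if drop_last and batches and len(batches[-1]) < batch_size:
        batches.pop()  # discard the incomplete final batch
    return batches


keep = make_batches(10000, 64, drop_last=False)
drop = make_batches(10000, 64, drop_last=True)
print(len(keep), len(keep[-1]))  # 157 16
print(len(drop))                 # 156
```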

Code example:

```python
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Prepare the test dataset
test_data = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor())
test_loader = DataLoader(dataset=test_data, batch_size=64, shuffle=True, num_workers=0, drop_last=False)

# The first image of the test set and its target (the class label)
img, target = test_data[0]
print(img.shape)  # torch.Size([3, 32, 32])
print(target)

writer = SummaryWriter('dataloader')
for epoch in range(2):
    step = 0
    for data in test_loader:
        imgs, targets = data  # imgs: [64, 3, 32, 32], targets: [64]
        # print(imgs.shape)
        # print(targets)
        # one tag per epoch, so the two shuffled orders can be compared side by side
        writer.add_images("Epoch: {}".format(epoch), imgs, step)
        step = step + 1
writer.close()
```

shuffle=True result:

drop_last=False result:

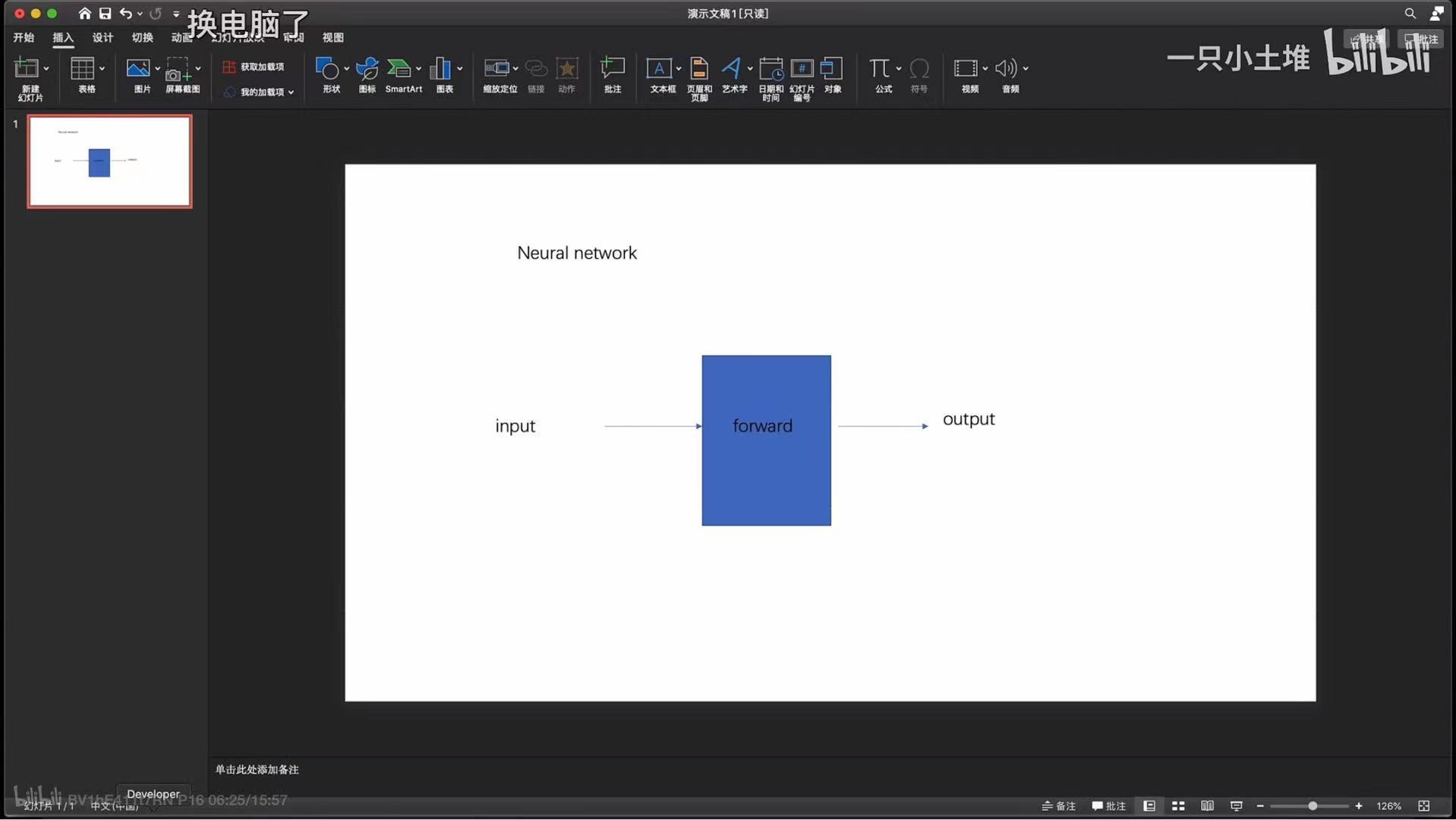

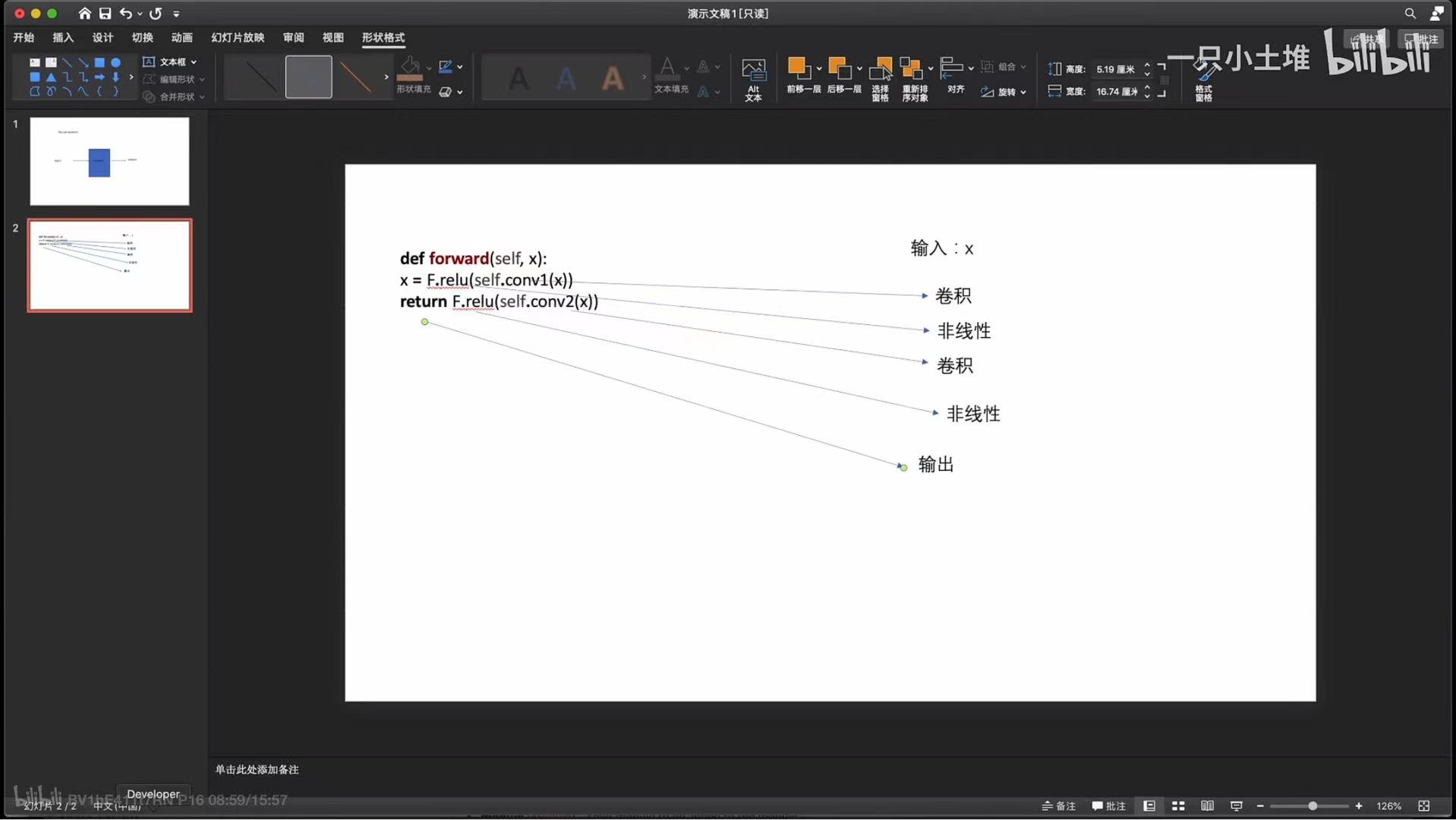

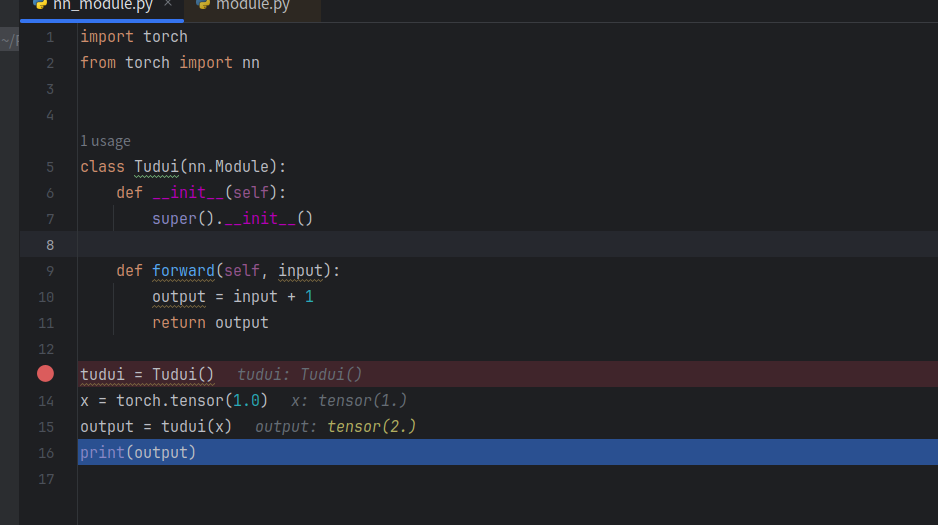

The basic skeleton of a neural network: using nn.Module

```python
import torch
from torch import nn


class Tudui(nn.Module):
    def __init__(self):
        super().__init__()

    def forward(self, input):
        output = input + 1
        return output


tudui = Tudui()
x = torch.tensor(1.0)
output = tudui(x)  # nn.Module.__call__ runs forward(x)
print(output)      # tensor(2.)
```

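Explanation:

forward method: defines the computation the network performs on its input.

How output = tudui(x) works: Tudui inherits from nn.Module, and nn.Module defines __call__, which makes its instances callable. Calling tudui(x) therefore runs __call__, which in turn calls forward(x).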

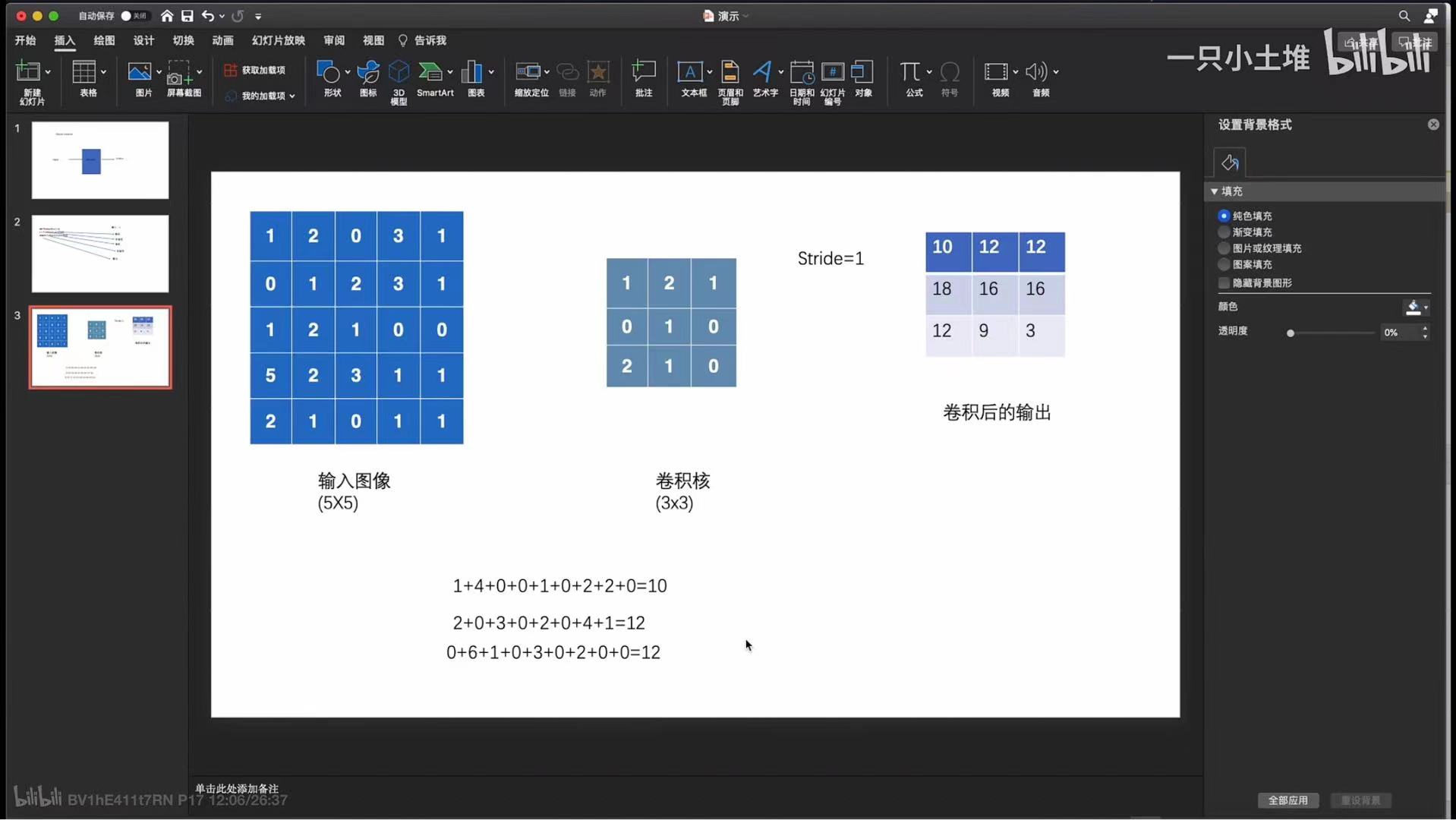

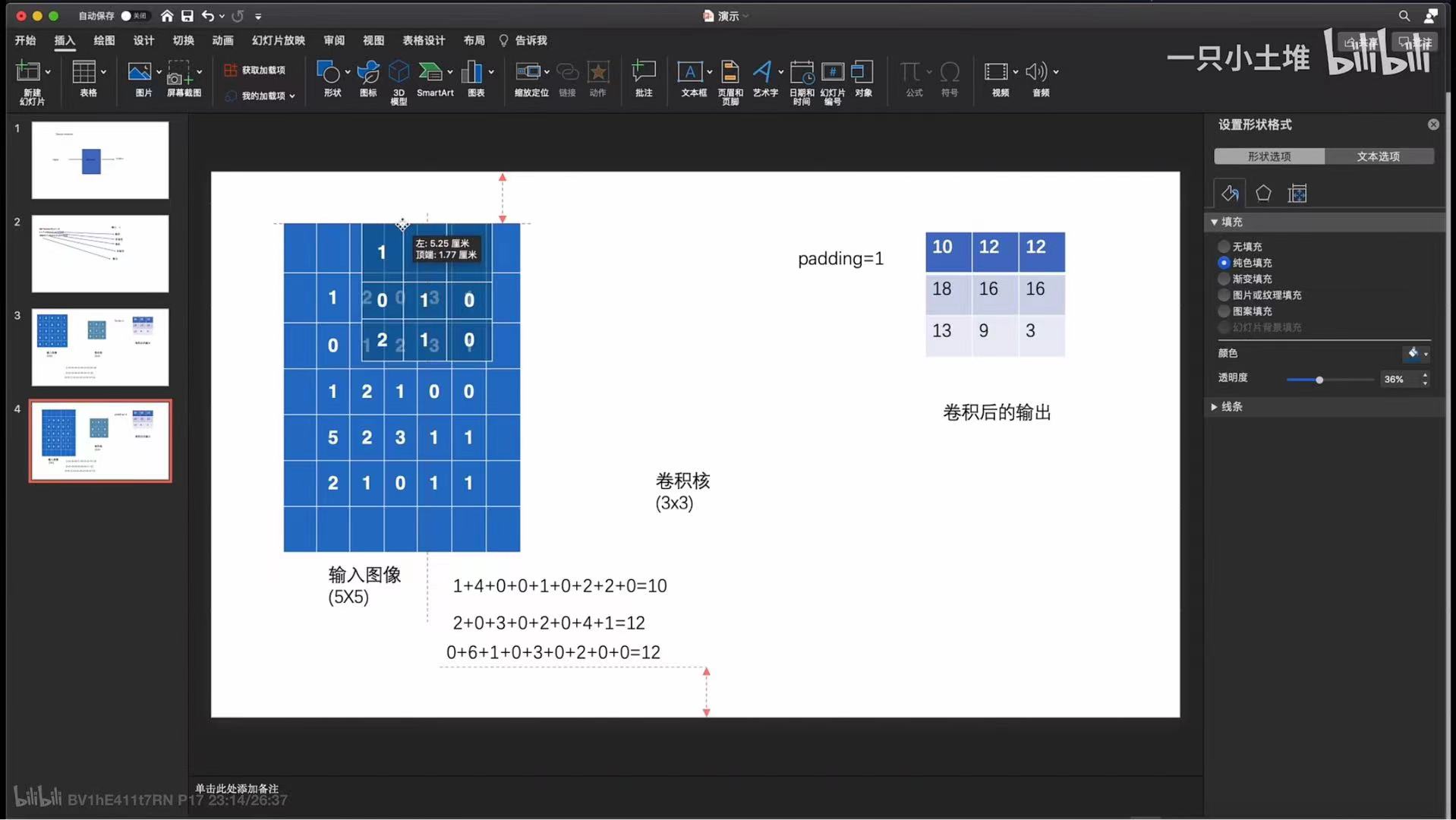
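The `__call__` to `forward` mechanism can be imitated in a few lines of plain Python. This is a stripped-down sketch with a hypothetical `ToyModule`. The real `nn.Module.__call__` also runs hooks and other bookkeeping before and after `forward`.

```python
class ToyModule:
    """A stripped-down imitation of nn.Module's call mechanism (no hooks, no parameters)."""

    def __call__(self, *args, **kwargs):
        # calling the instance like a function ends up in forward()
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError("subclasses define forward")


class AddOne(ToyModule):
    def forward(self, x):
        return x + 1


model = AddOne()
print(model(1.0))  # 2.0: model(x) -> __call__ -> forward(x)
```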

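torch.nn.functional.conv2d: the convolution operation

Illustration of the convolution operation:

The kernel slides over the input. At each position, the kernel values are multiplied element-wise with the input values they cover, and the products are summed to give one value of the convolved output.

Stride: how many positions the kernel moves at each step

Padding: values added around the border of the input image (zeros by default)

Illustration: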

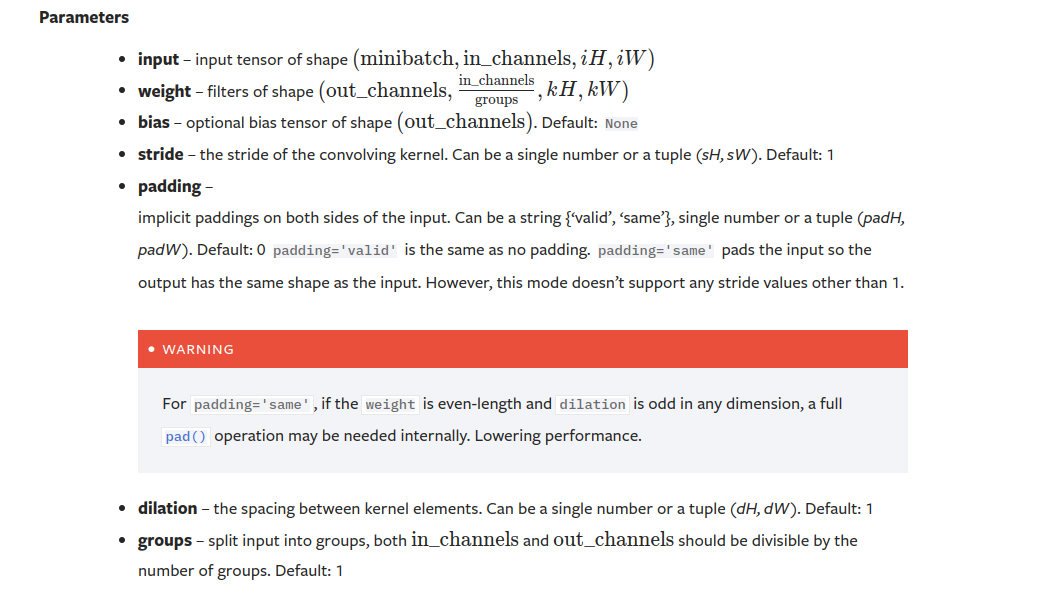

对conv2d的参数解析:

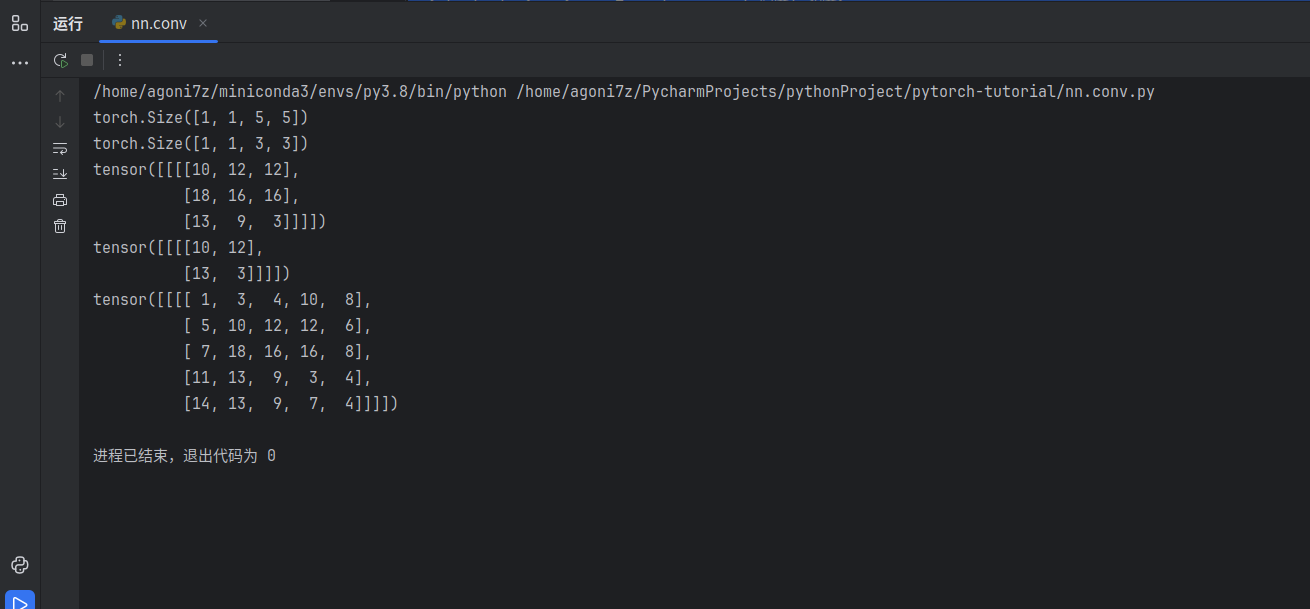

代码示例:

```python
import torch
import torch.nn.functional as F

# float dtype: some PyTorch versions do not implement conv2d for integer (Long) tensors on CPU
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]], dtype=torch.float32)

# conv2d expects (N, C, H, W) = (batch_size, channels, height, width)
# Here: a batch size of 1, 1 channel, and a 5x5 spatial size
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))  # weight: (out_channels, in_channels, kH, kW)
print(input.shape)
print(kernel.shape)

output = F.conv2d(input, kernel, stride=1)
print(output)
output2 = F.conv2d(input, kernel, stride=2)
print(output2)
output3 = F.conv2d(input, kernel, stride=1, padding=1)
print(output3)
```

Output:

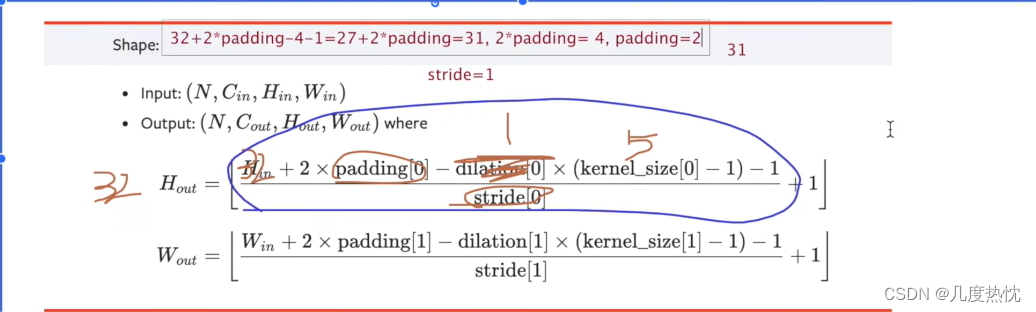
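To check the printed results by hand, this pure-Python sketch implements the same single-channel operation. It slides the kernel, multiplies element-wise and sums, with optional stride and zero padding. Strictly speaking, this is cross-correlation, which is what `conv2d` computes. The helper names `pad` and `conv2d` are hypothetical.

```python
def pad(inp, p):
    """Surround a 2D list with p rows/columns of zeros."""
    w = len(inp[0]) + 2 * p
    return ([[0] * w for _ in range(p)]
            + [[0] * p + row + [0] * p for row in inp]
            + [[0] * w for _ in range(p)])


def conv2d(inp, kernel, stride=1, padding=0):
    """Single-channel 2D cross-correlation: slide the kernel, multiply element-wise, sum."""
    inp = pad(inp, padding)
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(inp) - kh) // stride + 1
    out_w = (len(inp[0]) - kw) // stride + 1
    return [[sum(inp[i * stride + a][j * stride + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


inp = [[1, 2, 0, 3, 1],
       [0, 1, 2, 3, 1],
       [1, 2, 1, 0, 0],
       [5, 2, 3, 1, 1],
       [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]
print(conv2d(inp, kernel, stride=1))        # [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(conv2d(inp, kernel, stride=2))        # [[10, 12], [13, 3]]
print(len(conv2d(inp, kernel, padding=1)))  # 5 (a 5x5 output)
```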

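Neural networks: convolution layers

Background:

Convolution: the kernel slides over the input image; at each position, the overlapping values are multiplied element-wise and the products are summed.

Kernel: also called a filter; it can be thought of as a pattern or feature.

Convolution is like using a template to search the image for regions that resemble it. The more a region matches the kernel's pattern, the higher the activation, and this is how features are extracted.

nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros')

Purpose: applies a 2D convolution over an input made up of several 2D planes (channels)

Main parameters:

in_channels: number of input channels

out_channels: number of output channels, equal to the number of kernels

kernel_size: size of the kernel

stride: step size

padding: border padding

dilation: spacing between kernel elements (dilated, or atrous, convolution), often used in image segmentation

groups: grouped convolution setting, often used in lightweight models

bias: whether to add a learnable bias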
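The parameters above determine the output size. This sketch encodes the shape formula from the PyTorch `nn.Conv2d` documentation, H_out = floor((H_in + 2*padding - dilation*(kernel_size - 1) - 1) / stride + 1), and checks it against the sizes used in these notes. `conv_out_size` is a hypothetical helper.

```python
def conv_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output height (or width) of nn.Conv2d, per the shape formula in the PyTorch docs."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


print(conv_out_size(32, 3))             # 30: CIFAR-10 32x32 with a 3x3 kernel, no padding
print(conv_out_size(32, 5, padding=2))  # 32: a 5x5 kernel with padding=2 keeps the size
print(conv_out_size(5, 3, stride=2))    # 2: the stride=2 example above
```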

三通道变六通道:

import torch

import torchvision

from torch import nn

from torch.nn import Conv2d

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./download", train=False, transform=torchvision.transforms.ToTensor(),

download=True)

dataloader = DataLoader(dataset, batch_size=64)

class Tudui(nn.Module):

def __init__(self):

super(Tudui, self).__init__()

self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

def forward(self, x):

x = self.conv1(x)

return x

tudui = Tudui()

writer = SummaryWriter("logs")

step = 0

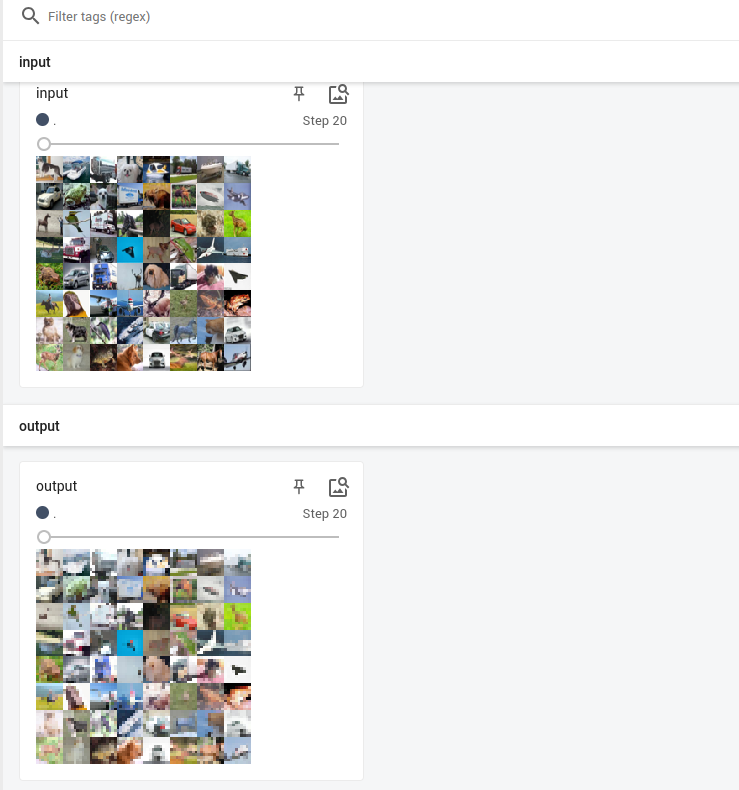

for data in dataloader:

imgs, targets = data

output = tudui(imgs)

print(imgs.shape)

print(output.shape)

# torch.Size([64, 3, 32, 32])

writer.add_images("input", imgs, step)

# torch.Size([64, 6, 30, 30]) -> [xxx, 3, 30, 30]

output = torch.reshape(output, (-1, 3, 30, 30))

writer.add_images("output", output, step)

step = step + 1

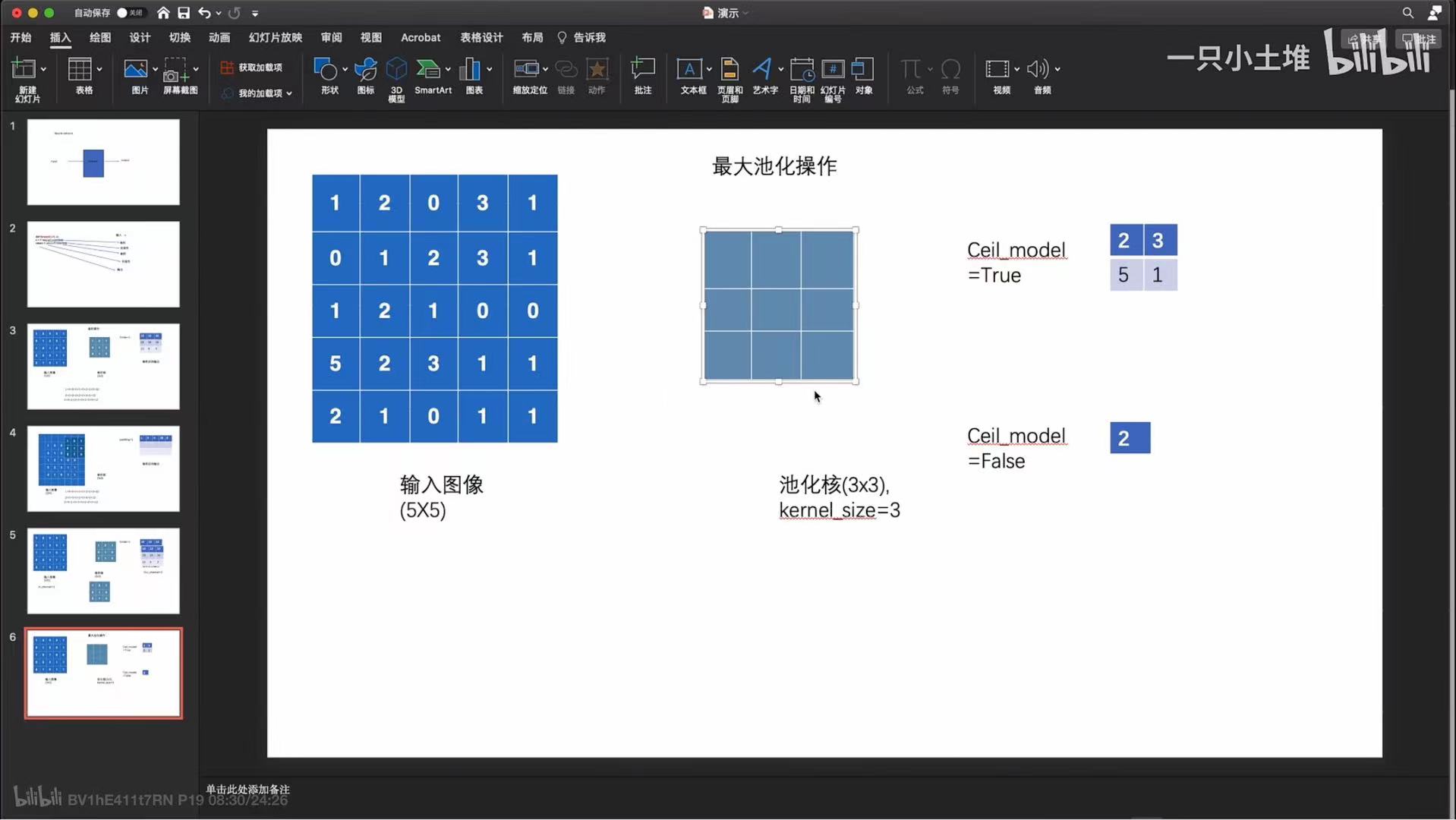

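Neural networks: using max pooling

Max pooling keeps the salient features of the input while reducing the amount of data, which speeds up training; it is like converting a video to 720p.

The stride of a max pooling layer defaults to kernel_size.

ceil_mode: True rounds the output size up (ceil), keeping partial windows at the border; False (the default) rounds down (floor), discarding them.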

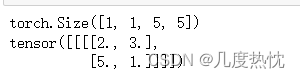

Code example:

```python
import torch
from torch import nn
from torch.nn import MaxPool2d

# float dtype: the integer version raised an error ("max_pool2d" not implemented for 'Long')
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
input = torch.reshape(input, (-1, 1, 5, 5))
print(input.shape)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        output = self.maxpool1(input)
        return output


tudui = Tudui()
output = tudui(input)
print(output)
```
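This pure-Python sketch reproduces the example for both values of ceil_mode. It covers a single channel with no padding, and the stride defaults to kernel_size as in MaxPool2d. The extra check in `_out_len` reflects the documented MaxPool2d rule that, with ceil_mode=True, a window must start inside the input. The helper names are hypothetical.

```python
import math


def _out_len(n, kernel_size, stride, ceil_mode):
    out = (math.ceil if ceil_mode else math.floor)((n - kernel_size) / stride) + 1
    if ceil_mode and (out - 1) * stride >= n:  # the last window must start inside the input
        out -= 1
    return out


def max_pool2d(inp, kernel_size, stride=None, ceil_mode=False):
    """Single-channel max pooling with no padding; stride defaults to kernel_size, like MaxPool2d."""
    stride = stride or kernel_size
    h, w = len(inp), len(inp[0])
    out_h = _out_len(h, kernel_size, stride, ceil_mode)
    out_w = _out_len(w, kernel_size, stride, ceil_mode)
    # windows that run past the border (only possible with ceil_mode=True) are clipped
    return [[max(inp[r][c]
                 for r in range(i * stride, min(i * stride + kernel_size, h))
                 for c in range(j * stride, min(j * stride + kernel_size, w)))
             for j in range(out_w)]
            for i in range(out_h)]


inp = [[1, 2, 0, 3, 1],
       [0, 1, 2, 3, 1],
       [1, 2, 1, 0, 0],
       [5, 2, 3, 1, 1],
       [2, 1, 0, 1, 1]]
print(max_pool2d(inp, 3, ceil_mode=True))   # [[2, 3], [5, 1]]
print(max_pool2d(inp, 3, ceil_mode=False))  # [[2]]
```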

Applying max pooling to the CIFAR-10 test set and viewing the input and output in TensorBoard:

```python
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10(root='./data_set', train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=64)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.maxpool1 = nn.MaxPool2d(kernel_size=3, ceil_mode=False)

    def forward(self, input):
        output = self.maxpool1(input)
        return output


tudui = Tudui()
writer = SummaryWriter(log_dir='logs_maxpools')
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, step)
    output = tudui(imgs)  # [64, 3, 32, 32] -> [64, 3, 10, 10]
    writer.add_images("output", output, step)
    step = step + 1
writer.close()
```

Result:

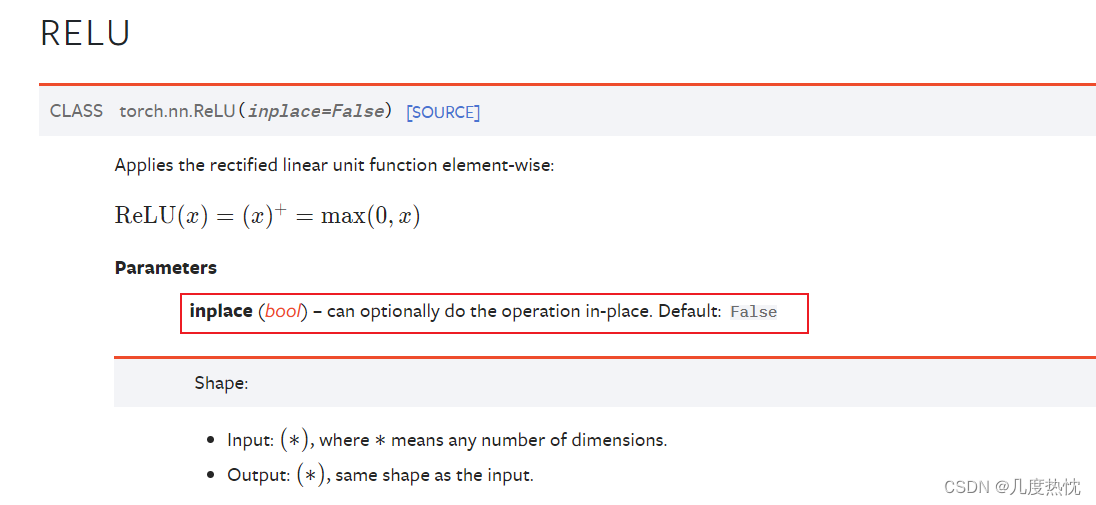

神经网络-非线性激活

非线性激活层:引入非线性的特性,使得神经网络具有更强的表达能力和适应能力

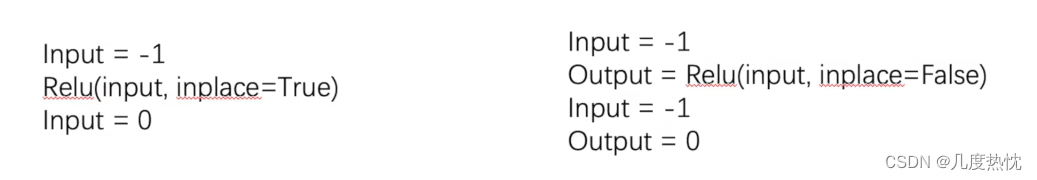

常用函数:

inplace():为True时对原输入进行激活函数的计算,计算结果赋给原输入;为False时,返回对原输入进行激活函数的计算的结果,原输入不发生改变,保留原始数据,默认为False

Basic use of ReLU

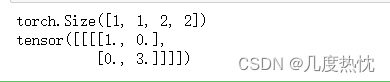

```python
import torch
from torch import nn
from torch.nn import ReLU

input = torch.tensor([[1, -0.5],
                      [-1, 3]])
input = torch.reshape(input, (-1, 1, 2, 2))  # add a batch dimension: (N, C, H, W)
print(input.shape)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.relu1 = ReLU()  # max(0, x) element-wise

    def forward(self, input):
        output = self.relu1(input)
        return output


tudui = Tudui()
output = tudui(input)
print(output)
```

Result:

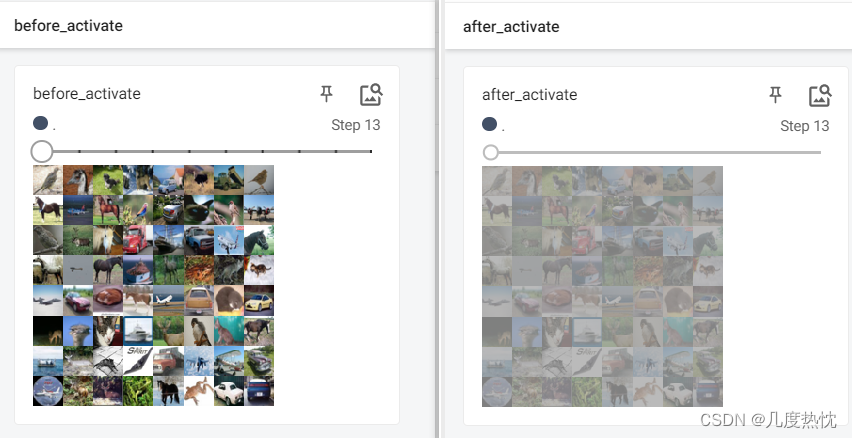
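This plain-Python sketch shows what ReLU and Sigmoid do to the 2x2 example input (flattened here), and how the inplace flag differs. The functions are hypothetical stand-ins operating on Python lists, not the torch.nn classes.

```python
import math


def relu(values, inplace=False):
    """max(0, x) element-wise; with inplace=True the input list itself is overwritten."""
    out = values if inplace else list(values)
    for i, x in enumerate(out):
        out[i] = max(0.0, x)
    return out


def sigmoid(values):
    """1 / (1 + e^-x) element-wise: squashes any real number into (0, 1)."""
    return [1 / (1 + math.exp(-x)) for x in values]


data = [1.0, -0.5, -1.0, 3.0]           # the 2x2 input above, flattened
print(relu(data), data)                 # [1.0, 0.0, 0.0, 3.0] [1.0, -0.5, -1.0, 3.0]
print(relu(data, inplace=True), data)   # [1.0, 0.0, 0.0, 3.0] [1.0, 0.0, 0.0, 3.0]
print(sigmoid([0.0]))                   # [0.5]
```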

Using Sigmoid

```python
import torch
import torchvision
from torch import nn
from torch.nn import ReLU, Sigmoid
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

input = torch.tensor([[1, -0.5],
                      [-1, 3]])
input = torch.reshape(input, (-1, 1, 2, 2))
print(input.shape)

dataset = torchvision.datasets.CIFAR10("../data_set", train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=64)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.relu1 = ReLU()
        self.sigmoid1 = Sigmoid()

    def forward(self, input):
        output = self.sigmoid1(input)
        return output


tudui = Tudui()
writer = SummaryWriter("../logs_relu")
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, global_step=step)
    output = tudui(imgs)
    writer.add_images("output", output, step)
    step += 1
writer.close()
```

Demo:

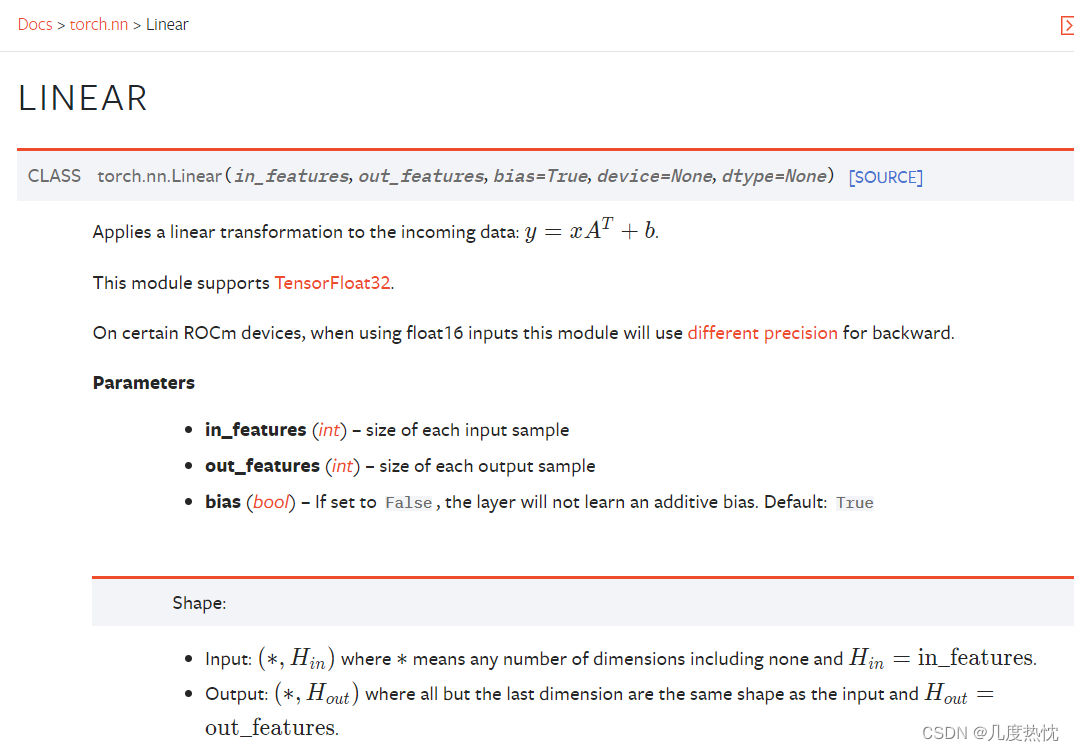

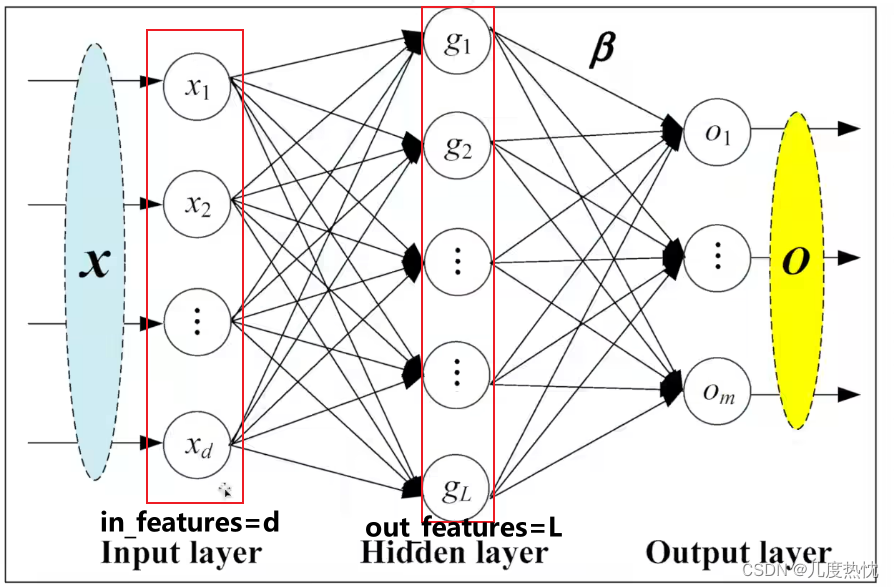

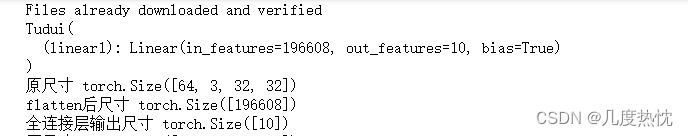

Neural networks: linear layers and other layers

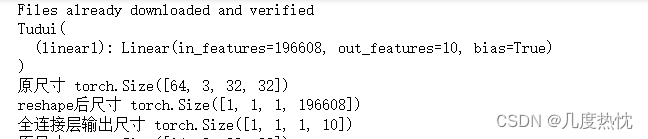

Code:

```python
import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
# drop_last=True: every batch has exactly 64 images, so the flattened size is always 196608
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)


# Build the network
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.linear1 = Linear(196608, 10)  # 196608 = 64 * 3 * 32 * 32

    def forward(self, input):
        output = self.linear1(input)
        return output


tudui = Tudui()
print(tudui)
for data in dataloader:
    imgs, targets = data
    print("original shape", imgs.shape)  # [64, 3, 32, 32]
    output = torch.reshape(imgs, (1, 1, 1, -1))  # after reshape: [1, 1, 1, 196608]
    print("shape after reshape", output.shape)
    output = tudui(output)
    print("linear layer output shape", output.shape)  # [1, 1, 1, 10]
```

Result:

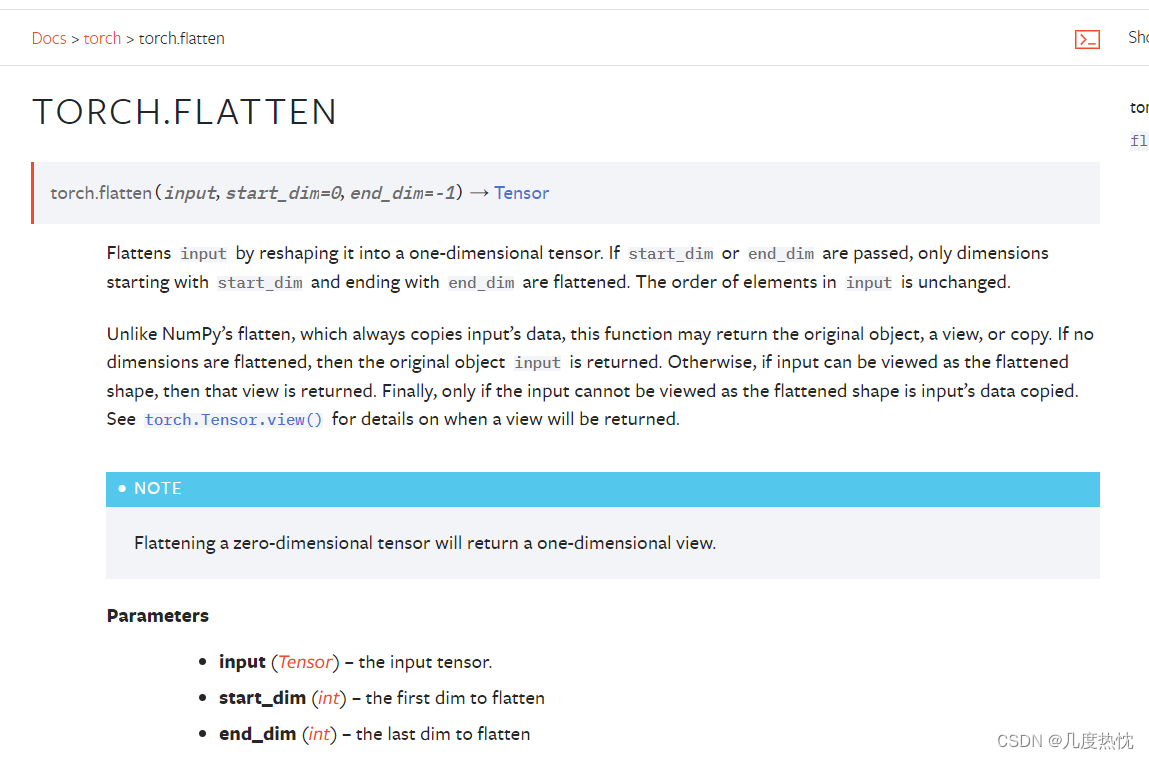

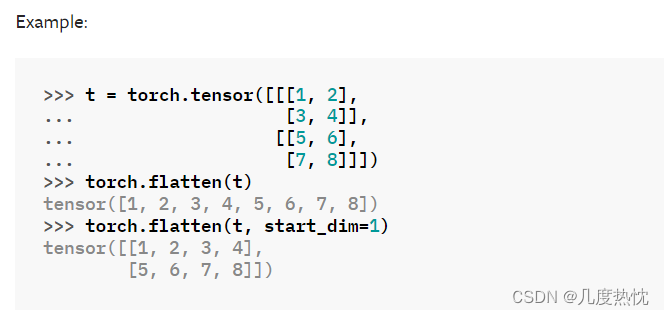
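A linear layer computes y = x W^T + b, as written in the PyTorch `nn.Linear` docs. Each output is a weighted sum of all the inputs plus a bias. This small plain-Python sketch uses made-up weights for `Linear(3, 2)`. It also counts the parameters of the `Linear(196608, 10)` used above. `linear` is a hypothetical helper.

```python
def linear(x, weight, bias):
    """y = x W^T + b for one sample; weight has shape (out_features, in_features)."""
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) + b for row, b in zip(weight, bias)]


# Linear(3, 2) with made-up weights: 2 outputs, each a weighted sum of the 3 inputs plus a bias
weight = [[1.0, 0.0, -1.0],
          [0.5, 0.5, 0.5]]
bias = [0.0, 1.0]
print(linear([1.0, 2.0, 3.0], weight, bias))  # [-2.0, 4.0]

# Parameters of Linear(196608, 10): one weight per (input, output) pair plus one bias per output
print(196608 * 10 + 10)  # 1966090
```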

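Flatten layer

flatten: a function that flattens the input tensor over a given range of dimensions and returns the result; with the default arguments the result is a one-dimensional tensor. (The nn.Flatten layer used later defaults to start_dim=1, which keeps the batch dimension.)

input: the tensor to flatten.

start_dim: the first dimension to flatten. Defaults to 0, i.e. flattening starts at dimension 0.

end_dim: the last dimension to flatten (inclusive). Defaults to -1, i.e. flattening goes up to the last dimension.

Example:

```python
import torch

t = torch.tensor([[[1, 2],
                   [3, 4]],
                  [[5, 6],
                   [7, 8]]])
torch.flatten(t)               # tensor([1, 2, 3, 4, 5, 6, 7, 8])
torch.flatten(t, start_dim=1)  # tensor([[1, 2, 3, 4], [5, 6, 7, 8]])
```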
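This shape-only sketch shows how start_dim and end_dim change the result. Dimensions start_dim through end_dim are merged into one, and the others are kept. `flatten_shape` is a hypothetical helper that works on shape tuples rather than tensors.

```python
from math import prod


def flatten_shape(shape, start_dim=0, end_dim=-1):
    """Shape after torch.flatten(x, start_dim, end_dim): dims start..end are merged into one."""
    n = len(shape)
    start, end = start_dim % n, end_dim % n  # allow negative dims such as -1
    return shape[:start] + (prod(shape[start:end + 1]),) + shape[end + 1:]


print(flatten_shape((2, 2, 2)))               # (8,)       like torch.flatten(t)
print(flatten_shape((2, 2, 2), start_dim=1))  # (2, 4)     like torch.flatten(t, start_dim=1)
print(flatten_shape((64, 3, 32, 32)))         # (196608,)  a whole CIFAR-10 batch
print(flatten_shape((64, 3, 32, 32), 1))      # (64, 3072) nn.Flatten's default start_dim=1
```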


Code example:

```python
import torchvision
from torch import flatten, nn
from torch.nn import Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)


# Build the network
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.linear1 = Linear(196608, 10)

    def forward(self, input):
        output = self.linear1(input)
        return output


tudui = Tudui()
print(tudui)
for data in dataloader:
    imgs, targets = data
    print("original shape", imgs.shape)  # [64, 3, 32, 32]
    output = flatten(imgs)  # start_dim=0: the whole batch becomes one vector of 196608 values
    print("shape after flatten", output.shape)  # [196608]
    output = tudui(output)
    print("linear layer output shape", output.shape)  # [10]
```

Result:

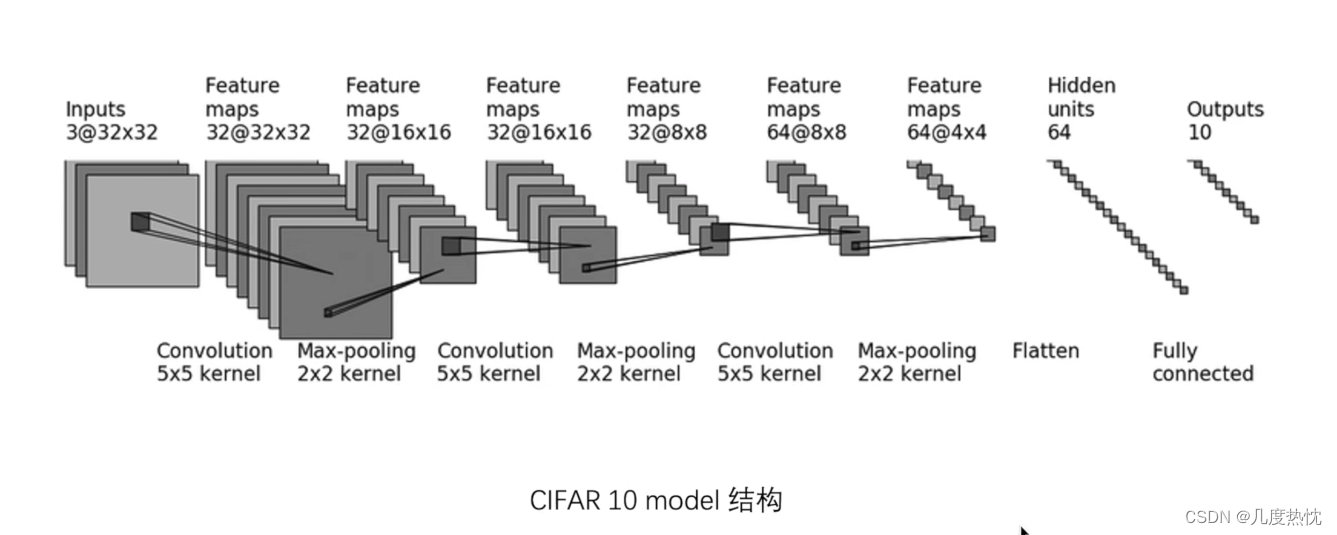

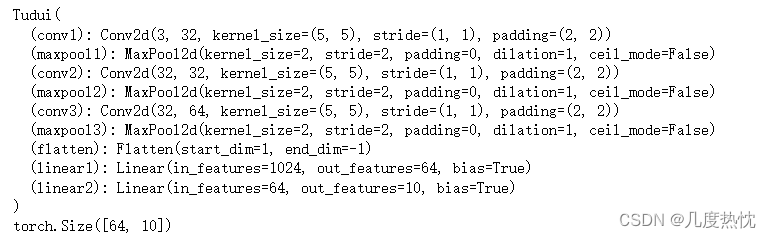

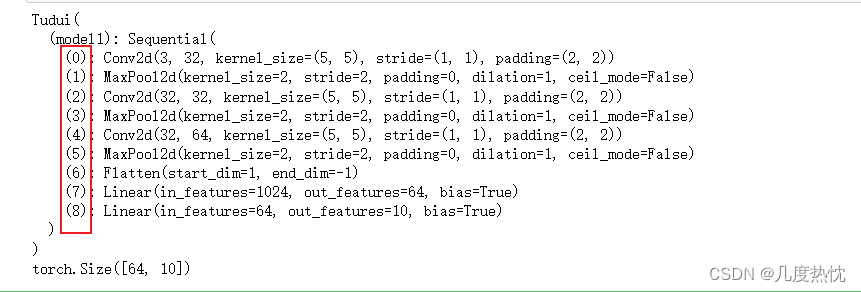

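Neural networks: a small hands-on model and using Sequential

Sequential: an ordered container that runs the modules it contains one after another, in the order they were added. torch.nn.Sequential offers a simple way to build a neural network model and keeps the code concise.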
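The core idea of Sequential fits in a few lines of plain Python: feed each layer the previous layer's output. This toy version is hypothetical. It works with any callables, here simple functions in place of layers.

```python
class Sequential:
    """Toy version of nn.Sequential: calls each layer on the previous layer's output, in order."""

    def __init__(self, *layers):
        self.layers = layers

    def __call__(self, x):
        for layer in self.layers:
            x = layer(x)
        return x


model = Sequential(lambda x: x + 1, lambda x: x * 10, abs)
print(model(-3))  # 20: (-3 + 1) * 10 = -20, then abs
```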

```python
# Without Sequential
import torch
from torch import nn
from torch.nn import Conv2d, Flatten, Linear, MaxPool2d


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.conv1 = Conv2d(3, 32, 5, padding=2)
        self.maxpool1 = MaxPool2d(2)
        self.conv2 = Conv2d(32, 32, 5, padding=2)
        self.maxpool2 = MaxPool2d(2)
        self.conv3 = Conv2d(32, 64, 5, padding=2)
        self.maxpool3 = MaxPool2d(2)
        self.flatten = Flatten()
        self.linear1 = Linear(1024, 64)
        self.linear2 = Linear(64, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x


tudui = Tudui()
print(tudui)
input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)  # torch.Size([64, 10])
```

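We build the network and feed it a dummy batch of 64 inputs of size 3×32×32 (torch.ones((64, 3, 32, 32))) to check that the input and output shapes of every layer are correct.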

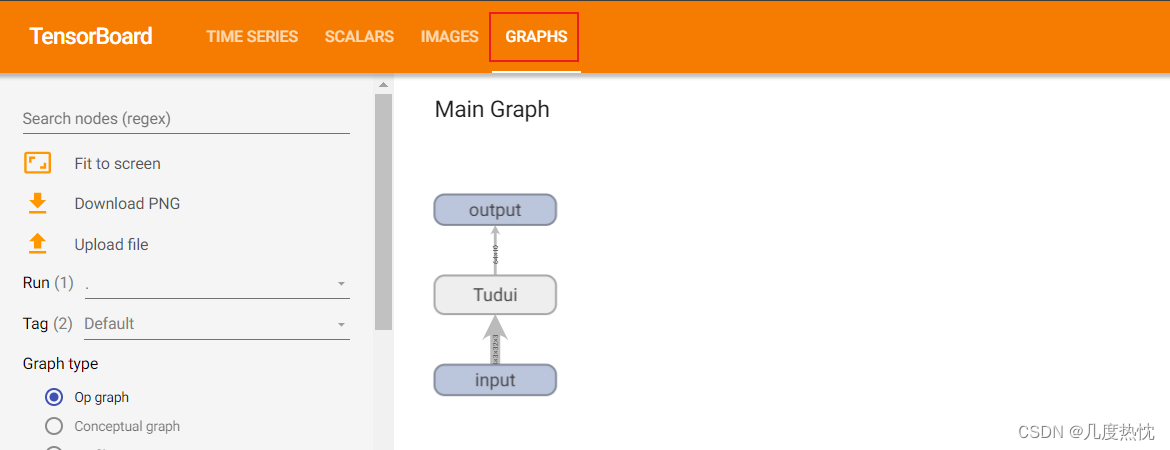

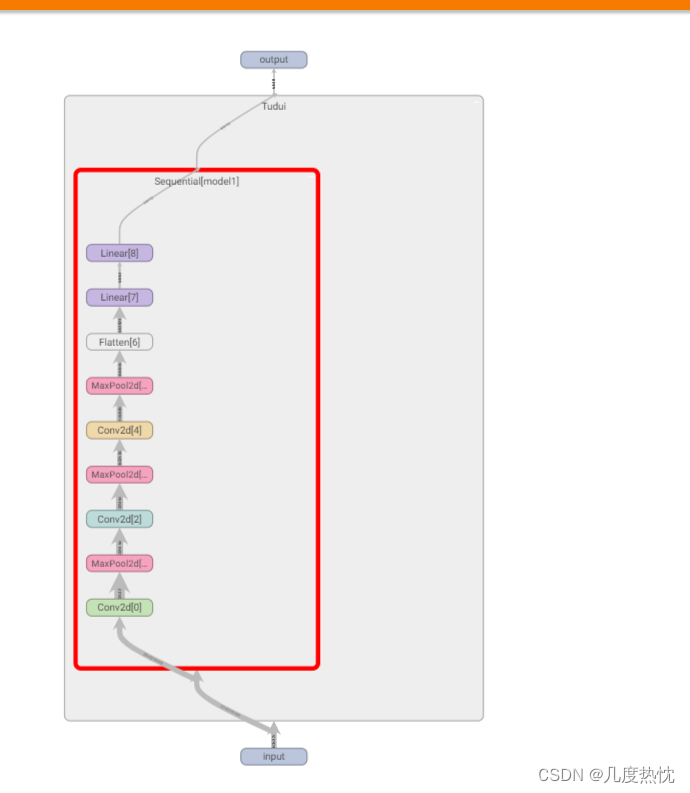
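The same check can be done on paper. This sketch follows one 3×32×32 image through the layers above. It uses the Conv2d output-size formula from the PyTorch docs and halves the size at each MaxPool2d(2). This confirms why the first Linear layer takes 1024 inputs. The helper names are hypothetical.

```python
def conv_out(size, kernel_size, padding=0, stride=1):
    """nn.Conv2d output size (dilation 1), from the shape formula in the PyTorch docs."""
    return (size + 2 * padding - kernel_size) // stride + 1


def trace_shapes(c=3, h=32, w=32):
    """Follow one image through the model above, returning (layer, shape) pairs."""
    steps = []
    for out_c in (32, 32, 64):  # conv1/2/3: 5x5 kernel, padding=2
        c, h, w = out_c, conv_out(h, 5, padding=2), conv_out(w, 5, padding=2)
        steps.append(("conv", (c, h, w)))
        h, w = h // 2, w // 2   # MaxPool2d(2) halves height and width
        steps.append(("maxpool", (c, h, w)))
    steps.append(("flatten", (c * h * w,)))
    steps.append(("linear1", (64,)))
    steps.append(("linear2", (10,)))
    return steps


for name, shape in trace_shapes():
    print(name, shape)  # ends with: flatten (1024,), linear1 (64,), linear2 (10,)
```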

Using Sequential, and adding the computation graph to TensorBoard:

```python
import torch
from torch import nn
from torch.nn import Conv2d, Flatten, Linear, MaxPool2d, Sequential
from torch.utils.tensorboard import SummaryWriter


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


tudui = Tudui()
print(tudui)
input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)  # torch.Size([64, 10])

writer = SummaryWriter("logs_seq")
writer.add_graph(tudui, input)  # record the model's computation graph, traced with this input
writer.close()
```

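Result:

Open it in TensorBoard and click GRAPHS.

writer.add_graph(model, input_to_model) adds the model's structure to TensorBoard: it runs the PyTorch model on the given input, records the computation graph, and visualizes it, which makes it easy to inspect the layer hierarchy and how data flows through the model. Here SummaryWriter comes from torch.utils.tensorboard (the TensorBoardX library offers a similar function).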

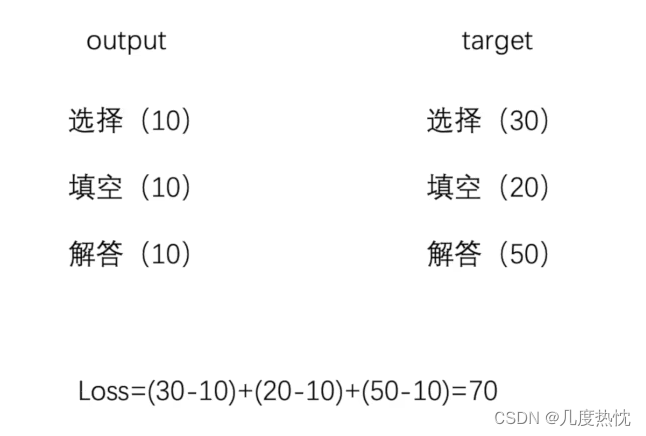

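Loss functions in torch.nn

A loss function measures the difference (error) between the model's predictions and the true labels, and the smaller the loss, the better. The loss also drives training: backpropagation uses it to compute how each parameter should change, and the parameters are then updated so that the loss decreases.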

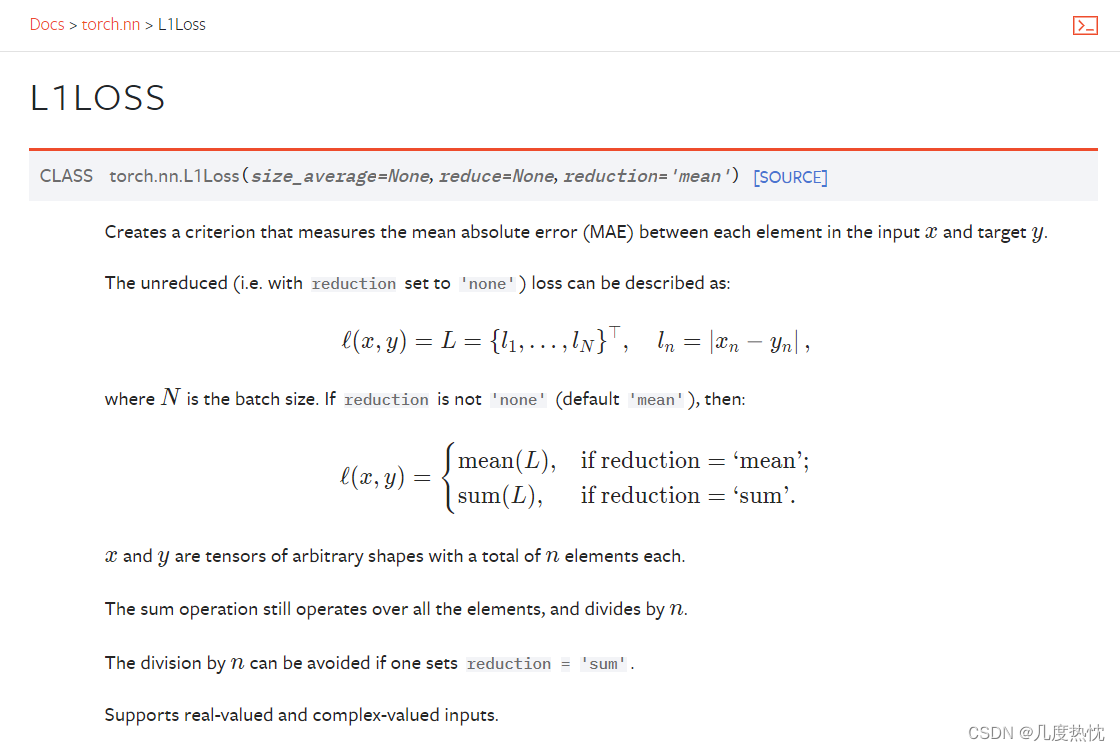

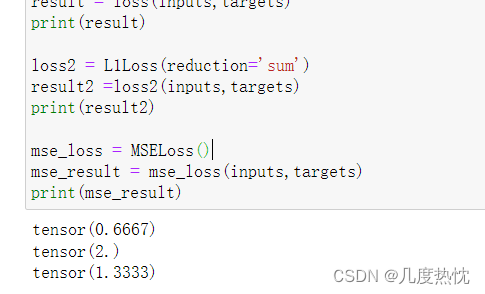

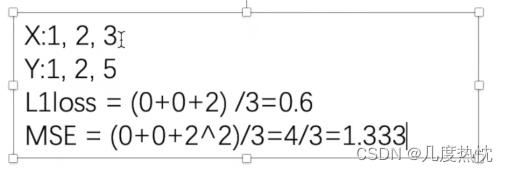

L1Loss (mean absolute error)

```python
import torch
from torch.nn import L1Loss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)
inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))

loss = L1Loss()  # reduction='mean' by default: (|1-1| + |2-2| + |3-5|) / 3
result = loss(inputs, targets)
print(result)    # tensor(0.6667)

loss2 = L1Loss(reduction='sum')  # sum instead of mean: 0 + 0 + 2
result2 = loss2(inputs, targets)
print(result2)   # tensor(2.)
```

Result:

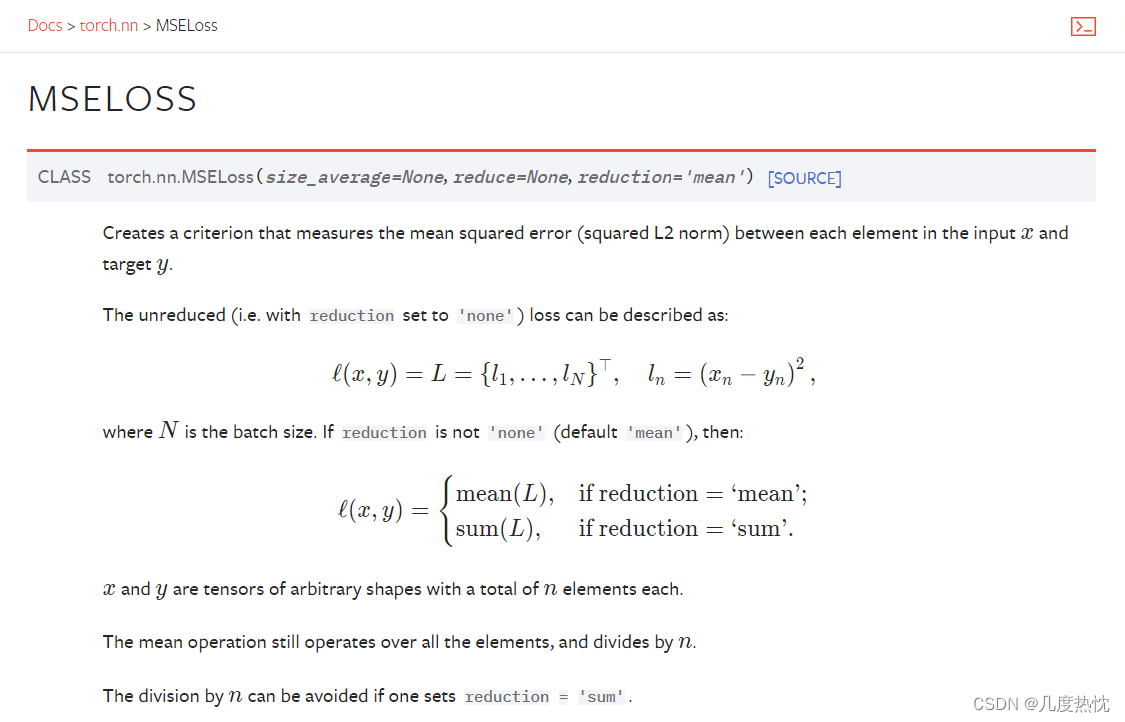

MSELoss (mean squared error)

```python
import torch
from torch.nn import L1Loss, MSELoss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)
inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))

loss = L1Loss()
result = loss(inputs, targets)
print(result)

loss2 = L1Loss(reduction='sum')
result2 = loss2(inputs, targets)
print(result2)

mse_loss = MSELoss()  # mean of the squared differences: (0 + 0 + 2^2) / 3
mse_result = mse_loss(inputs, targets)
print(mse_result)     # tensor(1.3333)
```

Result:

Hand calculation:

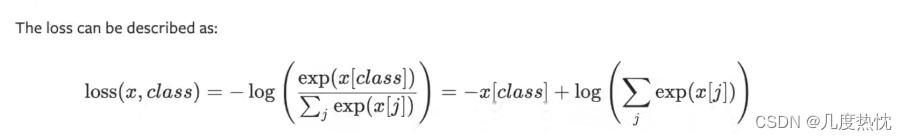

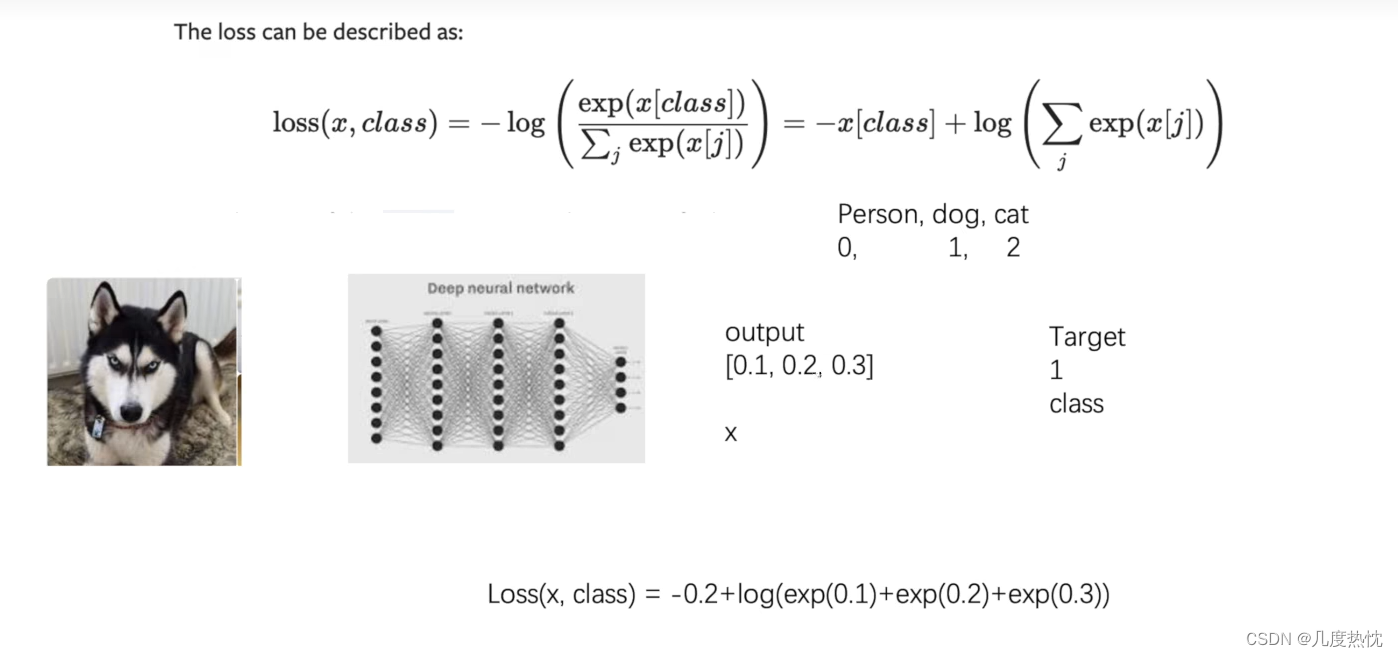

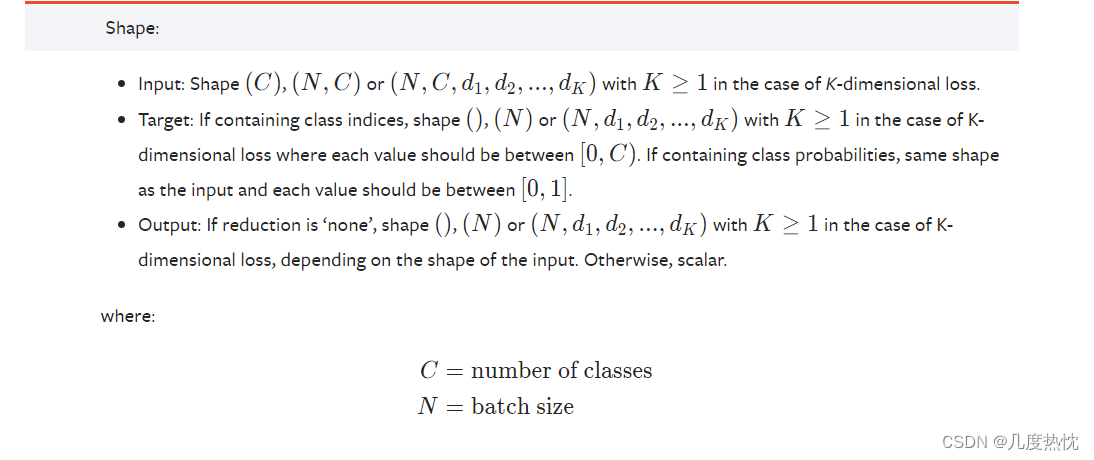
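This sketch repeats the hand calculation in plain Python with the same inputs [1, 2, 3] and targets [1, 2, 5]. It shows where 0.6667, 2 and 1.3333 come from. `l1_loss` and `mse_loss` are hypothetical helpers, not the torch.nn classes.

```python
def l1_loss(pred, target, reduction="mean"):
    diffs = [abs(p - t) for p, t in zip(pred, target)]
    return sum(diffs) / len(diffs) if reduction == "mean" else sum(diffs)


def mse_loss(pred, target):
    squares = [(p - t) ** 2 for p, t in zip(pred, target)]
    return sum(squares) / len(squares)


pred, target = [1.0, 2.0, 3.0], [1.0, 2.0, 5.0]
print(l1_loss(pred, target))         # 0.6666666666666666
print(l1_loss(pred, target, "sum"))  # 2.0
print(mse_loss(pred, target))        # 1.3333333333333333
```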

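torch.nn.CrossEntropyLoss: the cross-entropy loss

The cross-entropy loss is a loss function commonly used in classification, especially multi-class classification. It measures the difference between the probability distribution output by the model and the true labels; minimizing it adjusts the model parameters so that the model fits the classification task better.

For one sample with raw scores x over the classes and true class index class, PyTorch computes loss(x, class) = -x[class] + log(Σ_j exp(x[j])). When the prediction is correct the loss should be small, which means x[class] must be large relative to log(Σ_j exp(x[j])).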

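```python
import torch
from torch.nn import CrossEntropyLoss

x = torch.tensor([0.1, 0.2, 0.3])  # raw scores for 3 classes
y = torch.tensor([1])               # index of the true class
x = torch.reshape(x, (1, 3))        # the input must be (N, C) = (batch_size, num_classes)
loss_cross = CrossEntropyLoss()
result_cross = loss_cross(x, y)
print(result_cross)  # tensor(1.1019)
```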

Choose the loss function according to the task, and then pass in inputs of the shapes that loss function requires.
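This sketch applies the formula loss(x, class) = -x[class] + log(Σ_j exp(x[j])) directly to the example above, x = [0.1, 0.2, 0.3] and class = 1. It reproduces 1.1019. Subtracting the maximum before exponentiating is a standard trick for numerical stability. `cross_entropy` is a hypothetical helper.

```python
import math


def cross_entropy(logits, target):
    """-logits[target] + log(sum_j exp(logits[j])), computed stably by shifting by the max."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return -logits[target] + log_sum_exp


print(round(cross_entropy([0.1, 0.2, 0.3], 1), 4))  # 1.1019
```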

Computing the loss of the network built earlier with CrossEntropyLoss

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, Flatten, Linear, MaxPool2d, Sequential
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=1, drop_last=True)


# Using Sequential
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss = nn.CrossEntropyLoss()
tudui = Tudui()
for data in dataloader:
    imgs, targets = data
    outputs = tudui(imgs)  # [1, 10]: one score per class
    # print(outputs)
    # print(targets)       # [1]: the class index
    result_loss = loss(outputs, targets)
    print(result_loss)
```

Why the shapes of outputs and targets match

outputs and targets fit together because of how the model and CrossEntropyLoss are defined:

The input images imgs have shape [batch_size, channels, height, width], here [1, 3, 32, 32] (batch size 1, 3-channel 32x32 images).

The convolution and pooling layers in Sequential keep this 4-D layout; after the last MaxPool2d the shape is [1, 64, 4, 4].

The Flatten layer (default start_dim=1) keeps the batch dimension and flattens the rest, giving [1, 1024]; that is why the first Linear layer takes 1024 inputs.

The last Linear layer outputs [batch_size, num_classes] = [1, 10], one raw score per class (CIFAR-10 has 10 classes).

nn.CrossEntropyLoss() expects the input to have shape [batch_size, num_classes] and, when the targets are class indices, the target to have shape [batch_size] with values from 0 to num_classes - 1. That is exactly what outputs and targets are here. For each sample the loss picks the score of the target class, so no one-hot encoding is needed.

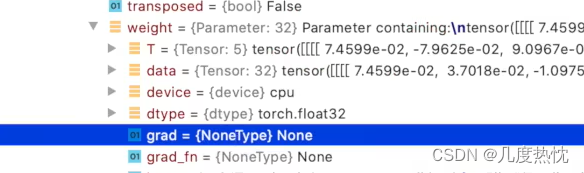

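.backward(): backpropagation

A tensor's gradient is stored in its grad attribute.

Autograd: when you operate on tensors, PyTorch records the operations and builds a computation graph. Calling .backward() (backpropagation) computes the gradients, which can then be read through the .grad attribute.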

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, Flatten, Linear, MaxPool2d, Sequential
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=1, drop_last=True)


# Using Sequential
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss = nn.CrossEntropyLoss()
tudui = Tudui()
for data in dataloader:
    imgs, targets = data
    outputs = tudui(imgs)
    result_loss = loss(outputs, targets)
    result_loss.backward()  # fills .grad of every parameter, e.g. tudui.model1[0].weight.grad
    print(result_loss)
```

Note: if result_loss.backward() is not called, the grad attribute of the parameters stays None.
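A toy scalar autograd can illustrate what `.backward()` and `.grad` do. Each value remembers the operation that produced it. `backward()` then applies the chain rule from the output back to the inputs. This is a hypothetical teaching sketch, not how PyTorch is implemented. Like PyTorch, it leaves `grad` as None until a backward pass runs, and it adds up gradients across passes.

```python
class Value:
    """A scalar that remembers how it was computed, so gradients can flow back (toy autograd)."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = None                 # like Tensor.grad: None until backward() has run
        self._parents = parents          # inputs of the operation that produced this value
        self._local_grads = local_grads  # d(self)/d(parent) for each parent

    def __add__(self, other):
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def backward(self, upstream=1.0):
        # chain rule: add this path's contribution, then pass it on to the parents
        self.grad = (self.grad or 0.0) + upstream
        for parent, local in zip(self._parents, self._local_grads):
            parent.backward(upstream * local)


x, y = Value(2.0), Value(3.0)
z = x * y + x          # builds the graph: a mul node, then an add node
print(x.grad)          # None: no backward pass yet
z.backward()
print(x.grad, y.grad)  # 4.0 2.0  (dz/dx = y + 1, dz/dy = x)
```

Calling `z.backward()` a second time would make `x.grad` 8.0, because gradients accumulate. This is the reason the next section clears them with `zero_grad()`.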

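Using optimizers from torch.optim

In deep learning, optimizer.zero_grad() is a very important operation: it resets the gradients of the model parameters to zero.

When training a neural network, backpropagation is usually used to compute the gradient of the loss with respect to the model parameters, and an optimizer uses these gradients to update the parameters so as to minimize the loss. After each backward pass, the gradients are accumulated (added) into the corresponding parameter tensors. If they are not cleared, the next backward pass adds the newly computed gradients on top of the old ones, and the gradient information becomes wrong.

The role of optimizer.zero_grad() is therefore to clear the gradients left over from the previous iteration before the next backward pass.

optim.step() is a method of the optimizer object (SGD, Adam, etc.) that updates the model's parameters using the computed gradients.

So in every iteration, call optimizer.zero_grad() before backpropagating with loss.backward(), and call optim.step() afterwards. In the code below, zero_grad() comes right after the loss is computed and before backward().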
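This sketch shows the zero_grad / backward / step cycle on a single number. The loss is (w - 3)^2, and its gradient is added into `w.grad` the way autograd accumulates. `Param`, `ToySGD` and `backward` are hypothetical stand-ins for a parameter tensor, `torch.optim.SGD` and `loss.backward()`. The same loop runs with and without clearing the gradients.

```python
class Param:
    """A single trainable number with a .grad slot, standing in for a parameter tensor."""

    def __init__(self, value):
        self.value = value
        self.grad = 0.0


class ToySGD:
    """Hypothetical optimizer exposing the same two calls as torch.optim.SGD."""

    def __init__(self, params, lr):
        self.params, self.lr = list(params), lr

    def zero_grad(self):
        for p in self.params:
            p.grad = 0.0

    def step(self):
        for p in self.params:
            p.value -= self.lr * p.grad  # gradient descent: w <- w - lr * dL/dw


def backward(w):
    """Gradient of the loss (w - 3)^2, *added* to w.grad the way autograd accumulates."""
    w.grad += 2 * (w.value - 3)


def train(clear_grads, steps=100):
    w = Param(0.0)
    opt = ToySGD([w], lr=0.1)
    for _ in range(steps):
        if clear_grads:
            opt.zero_grad()  # forget the previous step's gradient
        backward(w)
        opt.step()
    return w.value


print(round(train(clear_grads=True), 4))  # 3.0: reaches the minimum of (w - 3)^2
print(train(clear_grads=False))           # stale gradients keep piling up; w does not settle at 3

w = Param(0.0)
backward(w)
backward(w)
print(w.grad)  # -12.0: two backward passes without zero_grad() add up (-6 + -6)
```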

from torch import nn

from torch.nn import Module

from torch.nn import Conv2d

from torch.nn import MaxPool2d,Flatten,Linear, Sequential

import torch

import torchvision

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./dataset",train= False, transform =torchvision.transforms.ToTensor(), download=True)

dataloader = DataLoader(dataset, batch_size=1, drop_last=True)

#使用sequential

class Tudui(nn.Module):

def __init__(self):

super(Tudui,self).__init__()

self.model1 = Sequential(

Conv2d(3, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32,64,5, padding=2),

MaxPool2d(2),

Flatten(),

Linear(1024,64),

Linear(64, 10)

)

def forward(self, x):

x = self.model1(x)

return x

loss = nn.CrossEntropyLoss()

tudui = Tudui()

optim = torch.optim.SGD(tudui.parameters(), lr=0.01, )

for data in dataloader:

imgs, targets = data

outputs = tudui(imgs)

result_loss = loss(outputs, targets)

optim.zero_grad()

result_loss.backward()

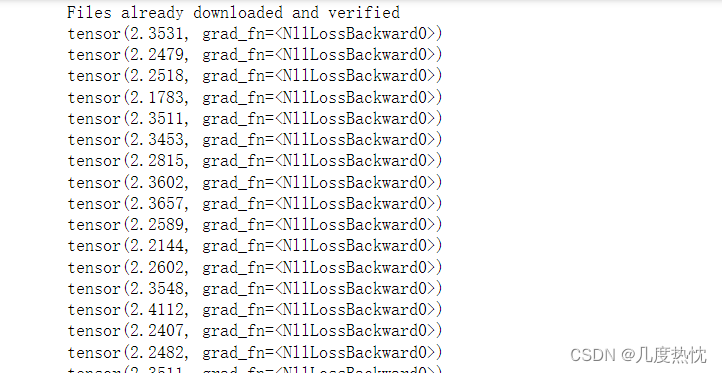

optim.step()

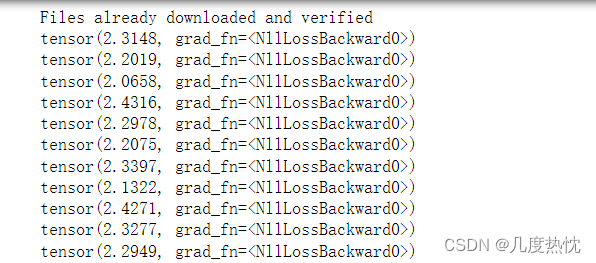

print(result_loss)

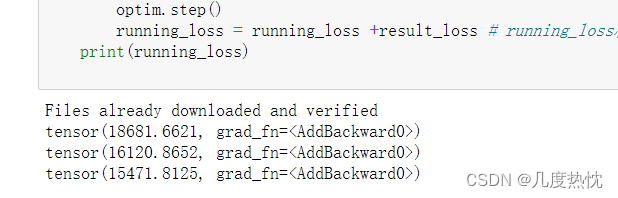

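for data in dataloader: goes through the whole dataset only once. Each sample seen in this loop is used to update the network parameters, but a single pass changes the network very little, so the loss barely changes.

We therefore introduce epochs and go over the data several times.

The modified code: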

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, Flatten, Linear, MaxPool2d, Sequential
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=1, drop_last=True)


# Using Sequential
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss = nn.CrossEntropyLoss()
tudui = Tudui()
optim = torch.optim.SGD(tudui.parameters(), lr=0.01)
for epoch in range(20):
    running_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        outputs = tudui(imgs)
        result_loss = loss(outputs, targets)
        optim.zero_grad()
        result_loss.backward()
        optim.step()
        # running_loss: total loss over one full pass through the data;
        # .item() takes the Python number so the computation graph is not kept alive
        running_loss = running_loss + result_loss.item()
    print(running_loss)
```

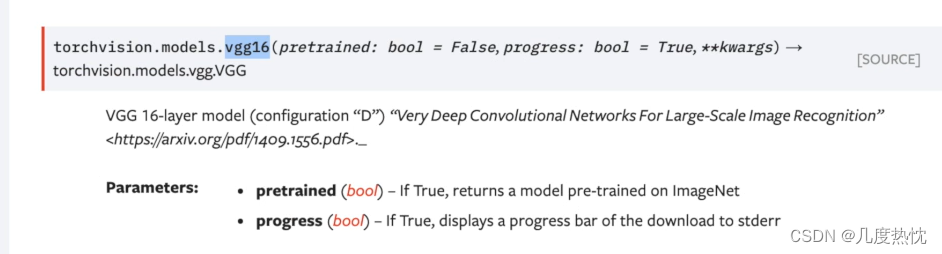

The VGG16 model provided by PyTorch

import torchvision

vgg16_false = torchvision.models.vgg16(pretrained=False) #pretrained=False仅加载网络模型,无参数

vgg16_true = torchvision.models.vgg16(pretrained=True) #pretrained=True加载网络模型,并从网络中下载在数据集上训练好的参数

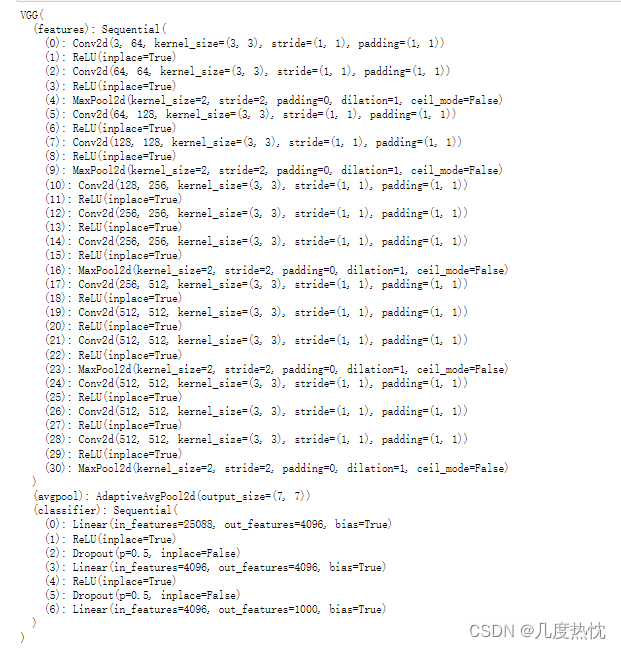

print(vgg16_true)

Result:

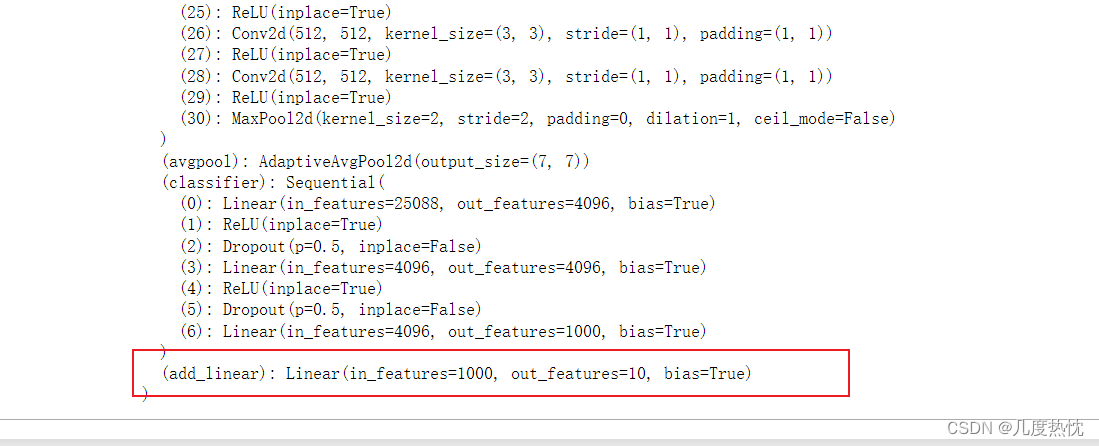

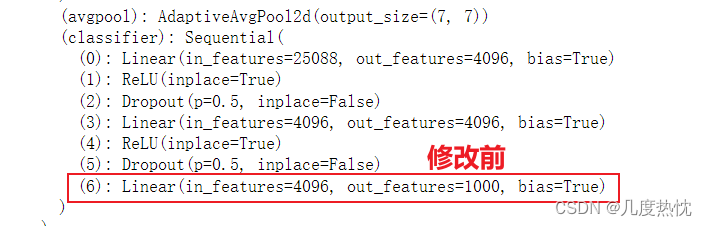

修改vgg16网络模型参数

在模型最后添加线性层:

train_data = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=torchvision.transforms.ToTensor())

vgg16_true.add_module('add_linear', nn.Linear(1000, 10))

print(vgg16_true)

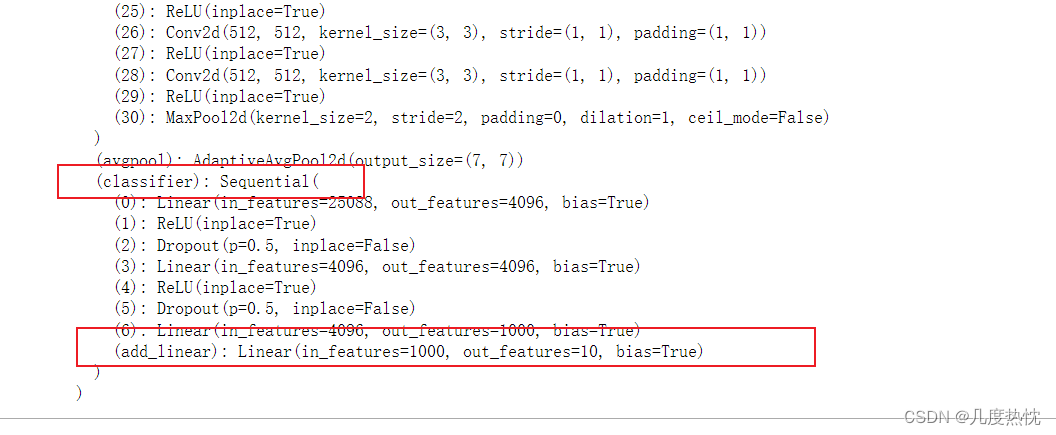

将添加的线性层加在classifier中:

vgg16_true.classifier.add_module('add_linear', nn.Linear(1000, 10))

print(vgg16_true)

不添加层,仅修改vgg原有网络:

vgg16_false.classifier[6] = nn.Linear(4096, 10)

print(vgg16_false)

The complete routine for training and testing a model

model:

```python
# model.py
import torch
from torch import nn


# Build the neural network
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, padding=2),
            nn.MaxPool2d(2),
            nn.Flatten(),  # flattened length: 64 * 4 * 4 = 1024
            nn.Linear(1024, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


if __name__ == '__main__':
    # quick shape check: 64 images of size 3x32x32 should give 64 x 10 scores
    tudui = Tudui()
    input = torch.ones((64, 3, 32, 32))
    output = tudui(input)
    print(output.shape)
```

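torch.no_grad()

Use torch.no_grad() when running inference or evaluating a model. It signals that the computation needs no backpropagation, and inside the with block PyTorch does not build a computation graph, which saves memory and time.

The with statement is a Python construct that wraps the execution of a block of code and guarantees that some cleanup runs automatically when the block finishes (here, gradient tracking is switched back on).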

train:

```python
import torch
import torch.nn as nn
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

from model import *  # the Tudui class defined in model.py

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10("./data_set", train=True, transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10("./data_set", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)

# len() gives the size of a dataset
train_data_size = len(train_data)
test_data_size = len(test_data)
print("Size of the training set: {}".format(train_data_size))
print("Size of the test set: {}".format(test_data_size))

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64, drop_last=True)
test_dataloader = DataLoader(test_data, batch_size=64, drop_last=True)

# Create the network
tudui = Tudui()

# Loss function
loss_fn = nn.CrossEntropyLoss()

# Optimizer
learning_rate = 1e-2
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training bookkeeping
total_train_step = 0  # number of training steps so far
total_test_step = 0   # number of test rounds so far
epoch = 10            # number of training epochs

# Add TensorBoard
writer = SummaryWriter("logs_train")

for i in range(epoch):
    print("------------- Epoch {} training starts -------------".format(i + 1))

    # Training
    for data in train_dataloader:
        imgs, targets = data
        output = tudui(imgs)
        loss = loss_fn(output, targets)

        # Optimize the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Testing
    total_test_loss = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
    print("Loss on the whole test set: {}".format(total_test_loss))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    total_test_step = total_test_step + 1

    torch.save(tudui, "tudui_{}.pth".format(i))
    print("Model saved")

writer.close()
```

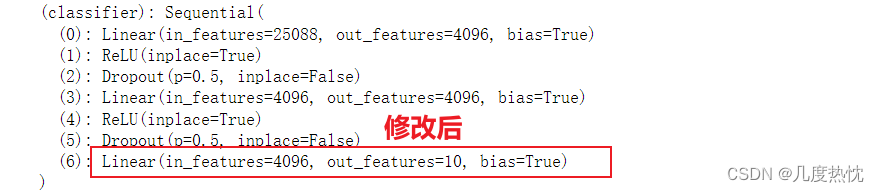

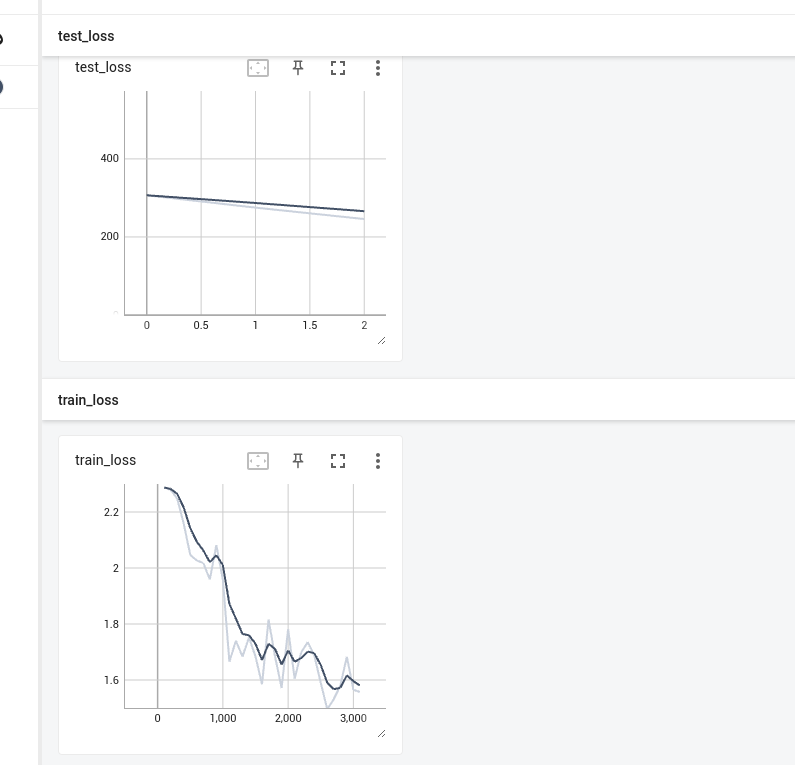

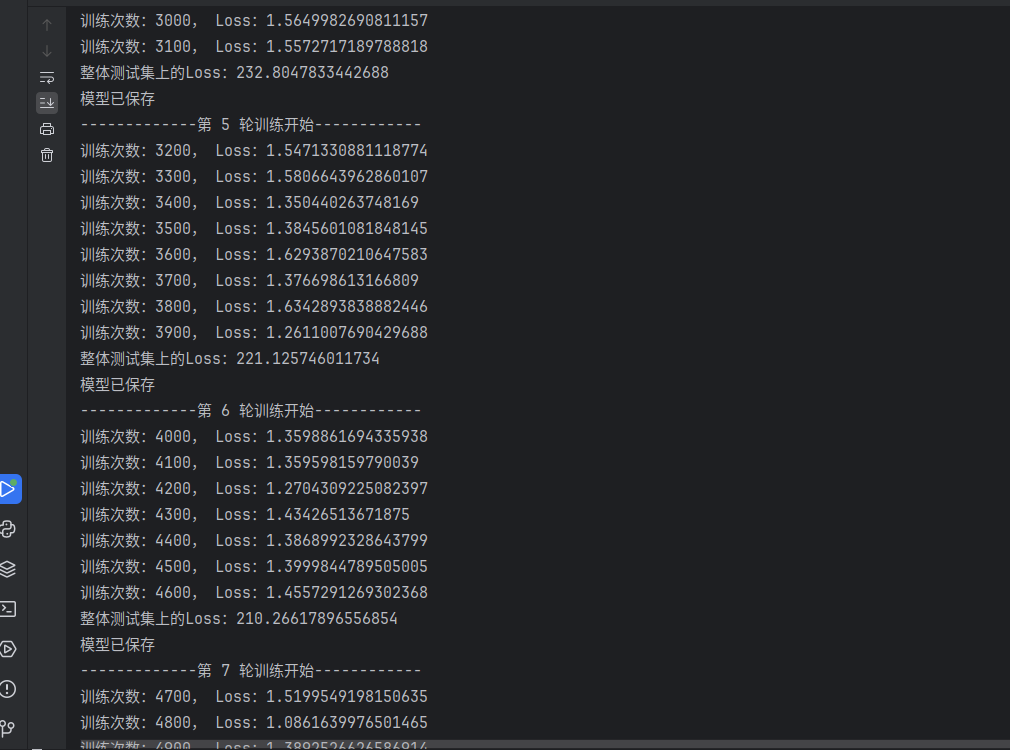

Result:

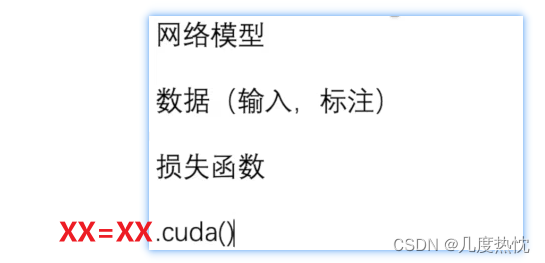

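Method 1: training on the GPU

Call xx = xx.cuda() on the network model, the loss function, and the data.

Both the training data and the test data (imgs and targets) must be moved with .cuda().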

```python
import torch
import torch.nn as nn
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10("./data_set", train=True, transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10("./data_set", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)

# len() gives the size of a dataset
train_data_size = len(train_data)
test_data_size = len(test_data)
print("Size of the training set: {}".format(train_data_size))
print("Size of the test set: {}".format(test_data_size))

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64, drop_last=True)
test_dataloader = DataLoader(test_data, batch_size=64, drop_last=True)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, padding=2),
            nn.MaxPool2d(2),
            nn.Flatten(),  # flattened length: 64 * 4 * 4 = 1024
            nn.Linear(1024, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


# Create the network; move it to the GPU if one is available
tudui = Tudui()
if torch.cuda.is_available():
    tudui = tudui.cuda()

# Loss function
loss_fn = nn.CrossEntropyLoss()
if torch.cuda.is_available():
    loss_fn = loss_fn.cuda()

# Optimizer
learning_rate = 1e-2
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training bookkeeping
total_train_step = 0  # number of training steps so far
total_test_step = 0   # number of test rounds so far
epoch = 10            # number of training epochs

# Add TensorBoard
writer = SummaryWriter("logs_train")

for i in range(epoch):
    print("------------- Epoch {} training starts -------------".format(i + 1))

    # Training
    for data in train_dataloader:
        imgs, targets = data
        if torch.cuda.is_available():
            imgs = imgs.cuda()
            targets = targets.cuda()
        output = tudui(imgs)
        loss = loss_fn(output, targets)

        # Optimize the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Testing
    total_test_loss = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            if torch.cuda.is_available():
                imgs = imgs.cuda()
                targets = targets.cuda()
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
    print("Loss on the whole test set: {}".format(total_test_loss))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    total_test_step = total_test_step + 1

    torch.save(tudui, "tudui_{}.pth".format(i))
    print("Model saved")

writer.close()
```

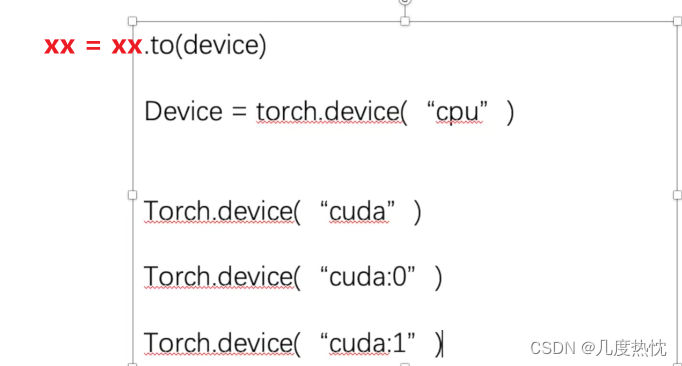

Method 2: training on the GPU

```python
import torch
import torch.nn as nn
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Define the training device (falls back to the CPU when no GPU is available)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10("./dataset", train=True, transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)

# len() gives the size of a dataset
train_data_size = len(train_data)
test_data_size = len(test_data)
print("Size of the training set: {}".format(train_data_size))
print("Size of the test set: {}".format(test_data_size))

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64, drop_last=True)
# keep the last partial batch so that all test_data_size images count toward the accuracy
test_dataloader = DataLoader(test_data, batch_size=64, drop_last=False)


# Create the network
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),  # flattened length: 64 * 4 * 4 = 1024
            nn.Linear(1024, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


tudui = Tudui()
tudui = tudui.to(device)

# Loss function
loss_fn = nn.CrossEntropyLoss()
loss_fn = loss_fn.to(device)

# Optimizer
learning_rate = 1e-2
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training bookkeeping
total_train_step = 0  # number of training steps so far
total_test_step = 0   # number of test rounds so far
epoch = 10            # number of training epochs

# Add TensorBoard
writer = SummaryWriter("../logs_train")

for i in range(epoch):
    print("------------- Epoch {} training starts -------------".format(i + 1))

    # Training
    tudui.train()  # training mode; only affects layers such as Dropout and BatchNorm
    for data in train_dataloader:
        imgs, targets = data
        imgs = imgs.to(device)
        targets = targets.to(device)
        output = tudui(imgs)
        loss = loss_fn(output, targets)

        # Optimize the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step = total_train_step + 1
        if total_train_step % 100 == 0:
            print("Training step: {}, Loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Testing
    tudui.eval()  # evaluation mode
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            imgs = imgs.to(device)
            targets = targets.to(device)
            outputs = tudui(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            # argmax(1): index of the highest score in each row, i.e. the predicted class
            accuracy = (outputs.argmax(1) == targets).sum()
            total_accuracy = total_accuracy + accuracy
    print("Loss on the whole test set: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(total_accuracy / test_data_size))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_step)
    total_test_step = total_test_step + 1

    torch.save(tudui, "tudui_{}.pth".format(i))
    print("Model saved")

writer.close()
```

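The model and the loss function are nn.Module objects, so model.to(device), model.cuda(), loss.to(device) and loss.cuda() move them in place and do not need to be reassigned. Tensors are different: imgs.to(device) returns a new tensor, so it must be assigned back (imgs = imgs.to(device)).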
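This sketch unpacks the accuracy line `(outputs.argmax(1) == targets).sum()` in plain Python on a made-up batch of three samples. It takes the index of the highest score in each row and counts how many match the targets. Dividing the total by the dataset size is only exact when every test sample is evaluated. With drop_last=True and batch_size=64, the loader would see 156 × 64 = 9984 of the 10000 test images. The helper names are hypothetical.

```python
def argmax(row):
    """Index of the largest value in a row (the predicted class)."""
    return max(range(len(row)), key=lambda i: row[i])


def count_correct(outputs, targets):
    """Pure-Python version of (outputs.argmax(1) == targets).sum() for one batch."""
    return sum(argmax(row) == t for row, t in zip(outputs, targets))


outputs = [[0.1, 0.2],   # predicts class 1
           [0.3, 0.4],   # predicts class 1
           [0.9, 0.1]]   # predicts class 0
targets = [0, 1, 0]
correct = count_correct(outputs, targets)
print(correct, correct / len(targets))  # 2 0.6666666666666666
```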

The complete model validation (inference) routine

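```python
import torch
import torchvision
from PIL import Image
from torch import nn

image_path = "./dog.png"
image = Image.open(image_path)
print(image)
# PNG files can have 4 channels (RGBA); convert('RGB') keeps the 3 channels the model expects
image = image.convert('RGB')
transform = torchvision.transforms.Compose([torchvision.transforms.Resize((32, 32)),
                                            torchvision.transforms.ToTensor()])
image = transform(image)
print(image)


# torch.load rebuilds the saved model object, so its class must be defined (or imported) here
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),  # flattened length: 64 * 4 * 4 = 1024
            nn.Linear(1024, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
# Recent PyTorch versions default to weights_only=True in torch.load, which refuses
# whole pickled models; weights_only=False restores the old behavior (omit it on old versions)
model = torch.load("tudui_0.pth", map_location=device, weights_only=False)
print(model)
image = torch.reshape(image, (1, 3, 32, 32))  # add the batch dimension
model.eval()
with torch.no_grad():
    image = image.to(device)
    output = model(image)
print(output)
print(output.argmax(1))  # index of the predicted CIFAR-10 class
```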
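`output.argmax(1)` gives only a class index. This sketch turns the index into a name. The list below is the CIFAR-10 class order that torchvision exposes as `test_set.classes`. The 10 scores are made up for illustration, and `predicted_class` is a hypothetical helper.

```python
CIFAR10_CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]


def predicted_class(scores):
    """Map 10 raw output scores to (index, class name), like output.argmax(1) plus a lookup."""
    index = max(range(len(scores)), key=lambda i: scores[i])
    return index, CIFAR10_CLASSES[index]


print(predicted_class([0.1, -1.2, 0.3, 0.8, 0.0, 2.5, -0.4, 0.2, -2.0, 0.6]))  # (5, 'dog')
```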

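Using a model trained (and saved) on the GPU on the CPU

```python
model = torch.load("XXXX.pth", map_location=torch.device("cpu"))
```

map_location=torch.device("cpu") is an argument of torch.load that specifies the device the loaded data is mapped to. With it, PyTorch places the loaded tensors on the CPU. By default, tensors are restored to the device they were saved from, so a model saved on a GPU is loaded back onto the GPU, which fails on a machine without CUDA.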