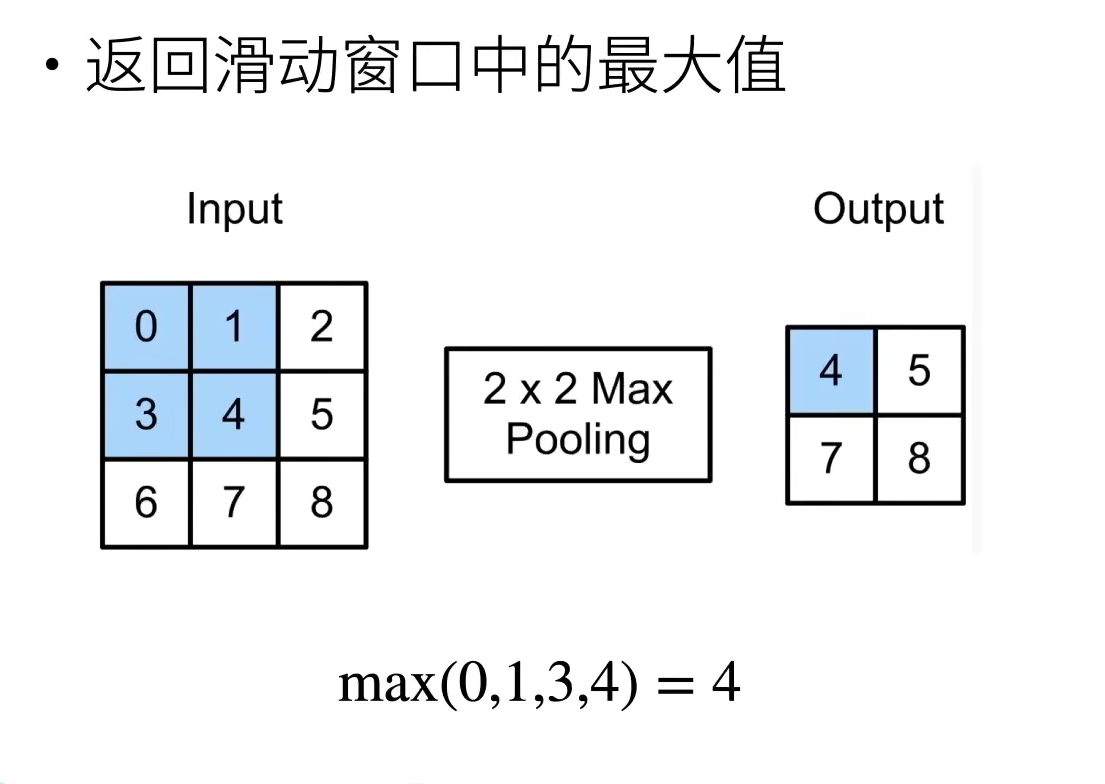

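Pooling layers serve two purposes: they reduce the convolutional layer's sensitivity to location, and they spatially downsample the representation.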
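To make the first purpose concrete, here is a minimal, dependency-free sketch. It uses plain Python lists rather than tensors, and the helper `max_pool` and the toy inputs are made up for illustration. A single bright pixel is moved one column to the right, yet the 2x2 max-pooled output at position (1, 1) stays the same.

```python
def max_pool(image, k):
    """Stride-1 max pooling with a k x k window over a 2D list of numbers."""
    rows = len(image) - k + 1
    cols = len(image[0]) - k + 1
    return [
        [max(image[i + a][j + b] for a in range(k) for b in range(k))
         for j in range(cols)]
        for i in range(rows)
    ]


# A single bright pixel, then the same pixel shifted one column to the right.
original = [
    [0, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
shifted = [
    [0, 0, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

pooled_original = max_pool(original, 2)
pooled_shifted = max_pool(shifted, 2)

# The raw value at (1, 1) changes, but the pooled value at (1, 1) does not,
# because its 2x2 window covers both pixel positions.
print(original[1][1], shifted[1][1])              # 1 0
print(pooled_original[1][1], pooled_shifted[1][1])  # 1 1
```

The tolerance is limited to shifts that stay inside one pooling window; a larger shift would still change the pooled output.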

```python
import torch
from torch import nn
from d2l import torch as d2l


# Forward pass of a pooling layer (2D input, stride 1, no padding)
def pool2d(X, pool_size, mode='max'):
    p_h, p_w = pool_size  # height and width of the pooling window
    Y = torch.zeros((X.shape[0] - p_h + 1, X.shape[1] - p_w + 1))
    for i in range(Y.shape[0]):
        for j in range(Y.shape[1]):
            if mode == 'max':
                Y[i, j] = X[i: i + p_h, j: j + p_w].max()  # max pooling
            elif mode == 'avg':
                Y[i, j] = X[i: i + p_h, j: j + p_w].mean()  # average pooling
    return Y


# Sanity check
X = torch.tensor([[0.0, 1.0, 2.0], [3.0, 4.0, 5.0], [6.0, 7.0, 8.0]])
print(pool2d(X, (2, 2)))  # max pooling, values: [[4, 5], [7, 8]]
print(pool2d(X, (2, 2), mode='avg'))  # average pooling, values: [[2, 3], [5, 6]]
```
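The double loop in `pool2d` is easy to read but slow for large inputs. As a sketch of one loop-free alternative, `Tensor.unfold` can gather every sliding window into two extra trailing dimensions, after which a single reduction produces the output. The name `pool2d_unfold` is hypothetical (it is not part of d2l or PyTorch), and the sketch assumes a 2D input with stride 1 and no padding, like `pool2d`.

```python
import torch


def pool2d_unfold(X, pool_size, mode='max'):
    """Stride-1 pooling over a 2D tensor without Python loops (illustrative)."""
    p_h, p_w = pool_size
    # After both unfolds: windows[i, j, a, b] == X[i + a, j + b]
    windows = X.unfold(0, p_h, 1).unfold(1, p_w, 1)
    if mode == 'max':
        return windows.amax(dim=(-2, -1))
    if mode == 'avg':
        return windows.mean(dim=(-2, -1))
    raise ValueError(f"unknown mode: {mode!r}")


X = torch.tensor([[0.0, 1.0, 2.0], [3.0, 4.0, 5.0], [6.0, 7.0, 8.0]])
print(pool2d_unfold(X, (2, 2)))              # values: [[4, 5], [7, 8]]
print(pool2d_unfold(X, (2, 2), mode='avg'))  # values: [[2, 3], [5, 6]]
```

Unlike the loop version, this variant raises a `ValueError` for an unrecognized `mode` instead of silently returning zeros; that is a design choice of the sketch, not behavior of the original function.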

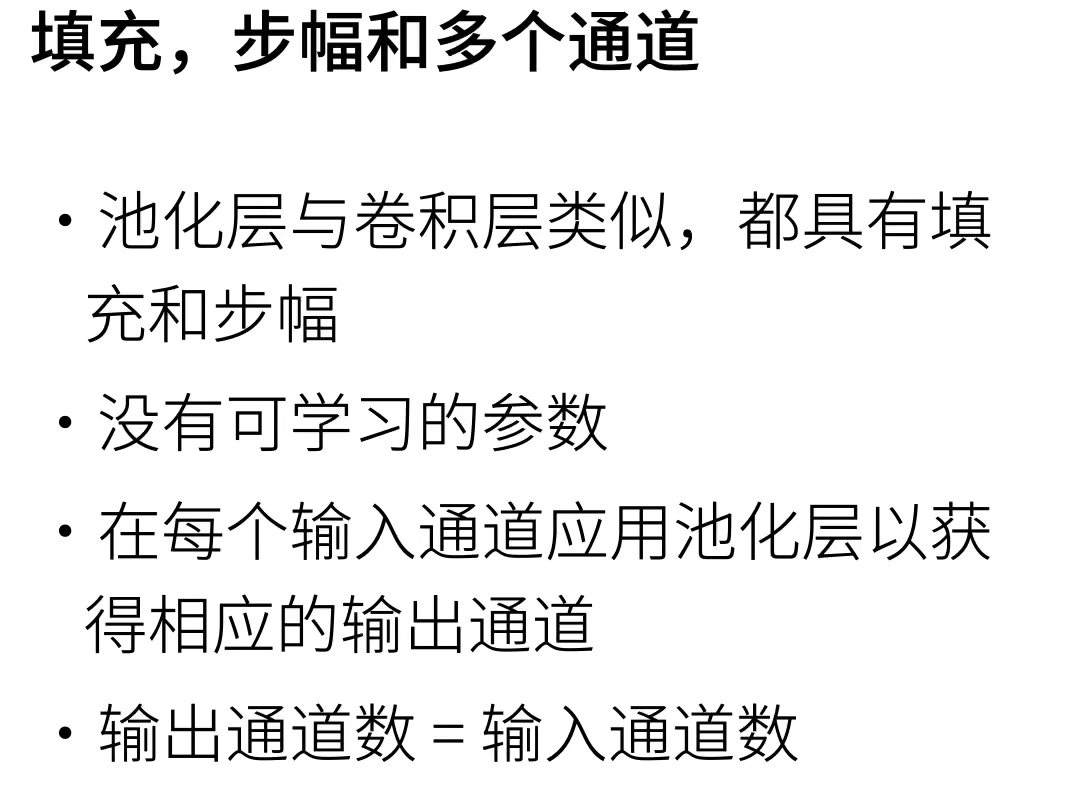

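```python
# Padding and stride
# Input layout is (batch, channels, height, width)
X = torch.arange(16, dtype=torch.float32).reshape((1, 1, 4, 4))
print(X)

# By default, the stride equals the pooling window size.
# Note: this rebinds the name pool2d, shadowing the function defined earlier.
pool2d = nn.MaxPool2d(3)
print(pool2d(X))

# Padding and stride can also be set explicitly
pool2d = nn.MaxPool2d(3, padding=1, stride=2)
print(pool2d(X))
```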
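The output sizes of the two layers above follow the usual sliding-window formula, floor((n + 2p - k) / s) + 1 per spatial dimension, which matches `nn.MaxPool2d` with its default `dilation=1` and `ceil_mode=False`. A small sketch of that arithmetic, where the helper `pool_output_size` is a hypothetical name introduced only for illustration:

```python
def pool_output_size(n, k, padding=0, stride=None):
    """Output length along one spatial dimension (dilation 1, floor rounding)."""
    if stride is None:
        stride = k  # mirrors the default: stride equals the window size
    return (n + 2 * padding - k) // stride + 1


# 4x4 input, 3x3 window, default stride of 3
print(pool_output_size(4, 3))                       # 1
# 4x4 input, 3x3 window, padding 1, stride 2
print(pool_output_size(4, 3, padding=1, stride=2))  # 2
```

So the first layer reduces the 4x4 input to a single value per channel, and the second produces a 2x2 output.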

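```python
# With multiple channels, the pooling layer operates on each input channel separately
X = torch.cat((X, X + 1), 1)  # concatenate along the channel dimension: shape (1, 2, 4, 4)
print(X)

pool2d = nn.MaxPool2d(3, padding=1, stride=2)
print(pool2d(X))  # the output has the same number of channels as the input
```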
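One way to check the per-channel claim, sketched here under the same setup: pooling the two-channel input in one call should give the same result as pooling each channel on its own and concatenating the outputs. Variable names such as `together` and `per_channel` are illustrative.

```python
import torch
from torch import nn

X = torch.arange(16, dtype=torch.float32).reshape((1, 1, 4, 4))
X2 = torch.cat((X, X + 1), 1)  # two channels: shape (1, 2, 4, 4)

pool = nn.MaxPool2d(3, padding=1, stride=2)

# Pool all channels in one call
together = pool(X2)

# Pool each channel separately, then stack the results back along dim 1
per_channel = torch.cat(
    [pool(X2[:, c:c + 1]) for c in range(X2.shape[1])], dim=1
)

print(together.shape)                      # torch.Size([1, 2, 2, 2])
print(torch.equal(together, per_channel))  # True
```

Because no values are mixed across channels, the channel count is unchanged by pooling, in contrast to a convolutional layer, which sums over input channels.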